Building A Summarisation Chatbot Using Cohere and Telegram

Learn how to integrate Cohere Large Language Models with Telegram in the simplest way. Create a Telegram chatbot that summarises incoming text messages and returns the summary to the user.

Introduction

In a previous post I wrote about the proof-of-concept (POC) integration between Cohere and HumanFirst. What excited me about this POC was how the HumanFirst studio can be used to access a Large Language Model (LLM) from a no-code perspective.

Another good example of how an LLM can be accessed and used in a real-world scenario is Cognigy’s Marketplace for Extensions and the OpenAI extension listed in the marketplace.

To some extent, finding real-world use-cases for LLMs, and practical ways to access and implement them, has been a challenge for some…

This article covers the following areas:

- The simplest code for using the Cohere summarisation functionality, with no prior model training.

- Using the Cohere code with a Telegram wrapper.

- Accessing our Telegram chatbot from the Telegram messaging interface for an end-to-end test.

To follow along:

- You will need Colab to run the Cohere code and poll the Telegram API.

- You will also need to register to make use of the Telegram API.

- And you will need to register with Cohere to generate an API key.

Cohere Summarisation Examples & Code

Let’s first look at the Cohere summarisation functionality in isolation…

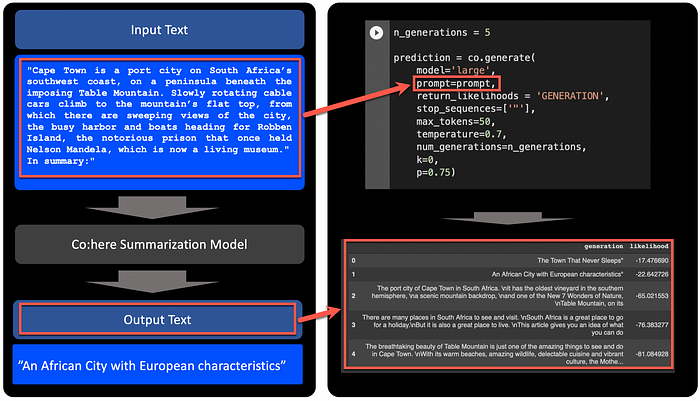

This notebook demonstrates a simple way of using the Cohere generation model to summarise text. It seems like the “In summary” key phrase is the cue for the generate model to summarise the input prompt.
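To make the role of the cue concrete, here is a minimal sketch of how such a prompt could be assembled. The helper name `build_summary_prompt` is hypothetical and not part of the Cohere SDK; it only reproduces the prompt shape used in the notebook (quoted passage, blank line, cue, opening quote).

```python
def build_summary_prompt(text: str, cue: str = "In summary:") -> str:
    """Wrap a passage in double quotes and append the summarisation cue.

    Hypothetical helper for illustration; it is not a Cohere function.
    """
    # Swap inner double quotes for single quotes so that '"' stays free
    # to act as the stop sequence for the generation.
    passage = text.strip().replace('"', "'")
    # The trailing opening quote invites the model to write a quoted summary,
    # so a stop sequence of '"' ends the output at the closing quote.
    return f'"{passage}"\n\n{cue}"'


prompt = build_summary_prompt(
    "Cape Town is a port city on South Africa's southwest coast."
)
print(prompt)
```

The resulting string can be passed as the `prompt` argument of the generate call, with `'"'` as the stop sequence.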

In the diagram below you see the input text, and the subsequent summary generated. The snippet of code shows the settings available in terms of temperature, model used, etc.

Also below you see the output: each generated text with its likelihood, which serves as a rough measure of confidence. The output can be ranked, which is useful when you want to offer a user multiple outputs. For instance, the narrative can be “Does this help?”, followed by “This might also be helpful…”

This can be seen as a form of user disambiguation, where the user is offered a few options to select from.
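The disambiguation idea could be sketched roughly as follows. The function names and the scores are made up for illustration; in practice the candidates would be the generated texts and their summed token likelihoods.

```python
from typing import Dict, List, Tuple


def rank_generations(candidates: List[Tuple[str, float]], top_n: int = 2) -> List[str]:
    """Deduplicate candidate summaries and return the top_n, highest score first."""
    best: Dict[str, float] = {}
    for text, score in candidates:
        text = text.strip()
        # Keep the best score seen for each distinct summary.
        if text not in best or score > best[text]:
            best[text] = score
    ranked = sorted(best, key=best.get, reverse=True)
    return ranked[:top_n]


def format_options(summaries: List[str]) -> str:
    """Present the ranked summaries as a short list of options for the user."""
    if not summaries:
        return "Sorry, I could not produce a summary."
    lines = [f"Does this help? {summaries[0]}"]
    for alternative in summaries[1:]:
        lines.append(f"This might also be helpful: {alternative}")
    return "\n".join(lines)


# Illustrative scores only; these are not real model outputs.
candidates = [
    ("Cape Town is a port city.", -12.4),
    ("Cape Town sits beneath Table Mountain.", -9.1),
    ("Cape Town is a port city.", -12.4),
    ("Robben Island is a museum.", -15.0),
]
reply = format_options(rank_generations(candidates, top_n=2))
print(reply)
```

Limiting the reply to two or three options keeps the message short enough for a chat interface.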

Below is a complete notebook you can copy and paste to run the example. Note the API key required to run the code; you will need to head over to the Cohere website and register for free! 🙂

!pip install cohere

import cohere
import time
import pandas as pd

api_key = 'xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx'
co = cohere.Client(api_key)

prompt = '''"Cape Town is a port city on South Africa’s southwest coast, on a peninsula beneath the imposing Table Mountain. Slowly rotating cable cars climb to the mountain’s flat top, from which there are sweeping views of the city, the busy harbor and boats heading for Robben Island, the notorious prison that once held Nelson Mandela, which is now a living museum."

In summary:"'''
print(prompt)

n_generations = 4

prediction = co.generate(
    model='large',
    prompt=prompt,
    return_likelihoods='GENERATION',
    stop_sequences=['"'],
    max_tokens=50,
    temperature=0.8,
    num_generations=n_generations,
    k=0,
    p=0.75)

# Get list of generations
gens = []
likelihoods = []
for gen in prediction.generations:
    gens.append(gen.text)

    sum_likelihood = 0
    for t in gen.token_likelihoods:
        sum_likelihood += t.likelihood
    # Get sum of likelihoods
    likelihoods.append(sum_likelihood)

pd.options.display.max_colwidth = 200
# Create a dataframe for the generated sentences and their likelihood scores
df = pd.DataFrame({'generation': gens, 'likelihood': likelihoods})
# Drop duplicates
df = df.drop_duplicates(subset=['generation'])
# Sort by highest sum likelihood
df = df.sort_values('likelihood', ascending=False, ignore_index=True)
df

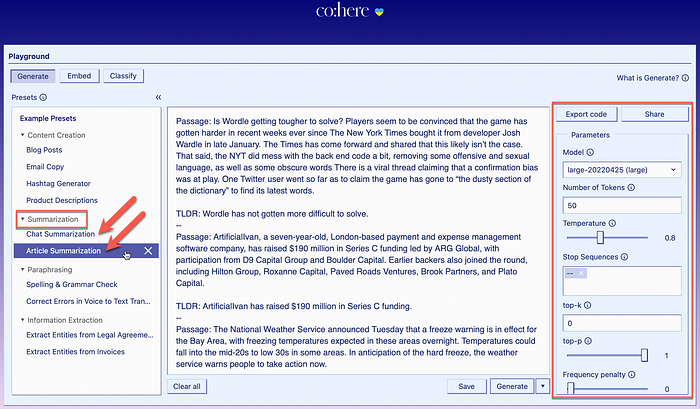

As mentioned in previous posts, Cohere has an extensive no-code Playground where you can access all their models and functionality for prototyping.

Some of the playground features and advantages are:

- It is a completely no-code interface, for easy experimentation.

- You do not need vast amounts of pre-processed data or long training times.

- The three models (generate, embed & classify) all have pre-loaded data, from which you can experiment and follow an incremental approach to building out your prototype.

Telegram Integration

The architecture for this Telegram summarisation chatbot is entirely contained within a Colab notebook. You will need two API keys: one for Cohere and one for the Telegram API.

Both of these API keys are defined within the Colab code. You can start creating your Telegram Bot/API by accessing the link below…

The diagram below shows the different components, with the yellow block as the only area we are going to tinker in. The user input will be passed to your Colab notebook by the Telegram Messaging platform.

Our code within Colab will facilitate the dialog turns, of which there are only two: the user sends text, and the bot replies with the summary. The Colab Python code will also invoke the Cohere model and pass the results back to the Telegram Messaging platform.
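As a rough illustration of this flow, and not the notebook's actual code, the sketch below polls the Telegram Bot API with `getUpdates` and replies with `sendMessage`, using only the Python standard library. The function names (`call_telegram`, `build_replies`, `run_bot`) are hypothetical, and `summarise` stands for any function that takes text and returns a summary, for example a wrapper around the Cohere generate call shown earlier.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, List, Optional, Tuple

API_ROOT = "https://api.telegram.org/bot{token}/{method}"


def call_telegram(token: str, method: str, params: dict) -> dict:
    """POST form-encoded parameters to a Bot API method and decode the JSON reply."""
    url = API_ROOT.format(token=token, method=method)
    data = urllib.parse.urlencode(params).encode()
    with urllib.request.urlopen(url, data=data, timeout=60) as response:
        return json.load(response)


def build_replies(
    updates: List[dict], summarise: Callable[[str], str]
) -> Tuple[List[Tuple[int, str]], Optional[int]]:
    """Turn one: read each incoming text. Turn two: pair it with its summary."""
    replies = []
    next_offset = None
    for update in updates:
        # Passing update_id + 1 as the offset marks this update as handled.
        next_offset = update["update_id"] + 1
        message = update.get("message") or {}
        text = message.get("text")
        if text:  # skip stickers, photos and other non-text updates
            replies.append((message["chat"]["id"], summarise(text)))
    return replies, next_offset


def run_bot(token: str, summarise: Callable[[str], str]) -> None:
    """Long-poll Telegram forever, answering every text message with a summary."""
    offset = None
    while True:
        params = {"timeout": 30}
        if offset is not None:
            params["offset"] = offset
        updates = call_telegram(token, "getUpdates", params).get("result", [])
        replies, new_offset = build_replies(updates, summarise)
        for chat_id, text in replies:
            call_telegram(token, "sendMessage", {"chat_id": chat_id, "text": text})
        if new_offset is not None:
            offset = new_offset
```

Calling `run_bot(telegram_token, summarise)` in a Colab cell would keep the bot running until the cell is interrupted. Keeping `build_replies` free of network calls makes the dialog logic easy to test with hand-written updates.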

Here is a snippet from the Colab notebook. Block one shows the chatbot dialog turn management; block two shows where the Cohere model is invoked.

Below is a screenshot from Telegram where you can see the conversation with the chatbot: the input is given, and the summary response is generated by Cohere.

And here is the code to run the bot end-to-end…

In Conclusion

There are various ways to use LLMs in a conversational interface…

A chatbot can be bootstrapped by making use of search.

LLMs are very good at performing semantic search on a piece of text, documents, etc. This is where the search is not based on keyword matching, but rather on matching the meaning and intent of the text.
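A minimal sketch of the idea, assuming each text has already been turned into an embedding vector: the closest match is the document whose vector has the highest cosine similarity to the query vector. The three-dimensional vectors below are hand-written stand-ins; real embeddings would come from an embedding model and have many more dimensions.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def semantic_search(
    query_vec: Sequence[float], doc_vecs: Dict[str, Sequence[float]], top_n: int = 1
) -> List[str]:
    """Return the top_n document keys ranked by similarity to the query vector."""
    ranked = sorted(
        doc_vecs,
        key=lambda name: cosine_similarity(query_vec, doc_vecs[name]),
        reverse=True,
    )
    return ranked[:top_n]


# Toy vectors for illustration only; they are not produced by any model.
documents = {
    "refund policy": [0.9, 0.1, 0.0],
    "opening hours": [0.1, 0.9, 0.2],
}
query = [0.8, 0.2, 0.1]  # stands in for "how do I get my money back?"
best_match = semantic_search(query, documents)
print(best_match)
```

Note that the query shares no keywords with "refund policy"; the match comes entirely from the vectors being close.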

An alternative way of bootstrapping a chatbot using LLMs is to orchestrate a few LLM elements to constitute the bot. These elements can include clustering, search, generation, etc.

LLMs and NLP can also be used to support a chatbot or perform a high-pass on user input prior to the intent and entity extraction.