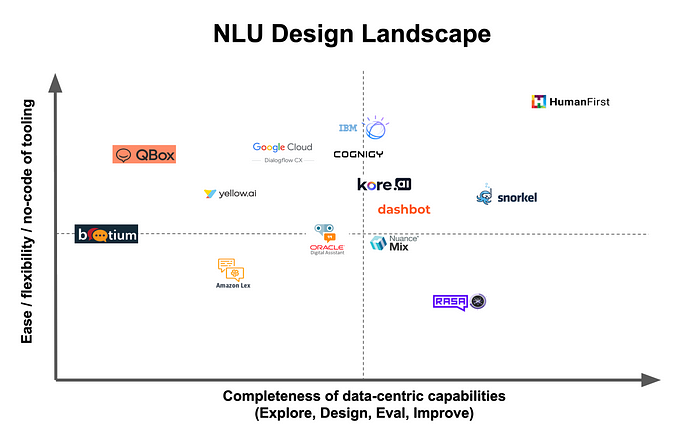

The Cobus Quadrant™ Of NLU Design

NLU design is vital to planning and continuously improving Conversational AI experiences.

Gartner recently released a report on the primary reasons chatbot implementations are not successful. The single mistake that accounted for most of the failures was that organisations start with technology choices and not with customer intent.

The report stressed the importance of NLU Design as the starting point: creating a chatbot begins with knowing and understanding the customer’s intent, in order to build a chatbot which is seamless, customer-centric and, above all, trusted.

Please follow me on LinkedIn for the latest updates on Conversational AI. 🙂

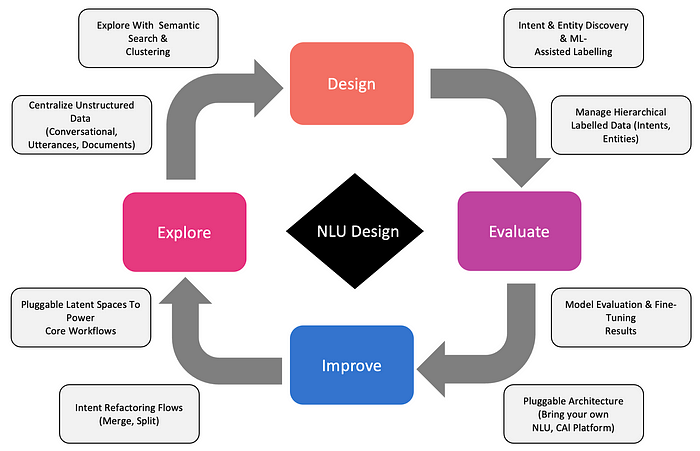

What Is NLU Design?

NLU Design is an end-to-end methodology to transform unstructured data into highly accurate and custom NLU.

While most conversational AI platforms provide intent, entity and training phrase management, successfully implementing NLU design requires a fuller suite of end-to-end data lifecycle management capabilities:

1️⃣ Explore

Centralise Unstructured Data (Conversational, Utterances, Documents). Designing high-quality NLU starts from the ground truth: unstructured data. The ability to ingest and centralise different types of data and formats (including multi-turn conversational dialogs) is critical.

Explore With Semantic Search & Clustering. Create a story or narrative from the data by creating clusters which are semantically similar.
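
To make the clustering idea concrete, here is a minimal sketch in Python. It is not how any of the tools in this article work internally; it assumes a simple bag-of-words vector as a stand-in for a real sentence embedding, and the function names (`vectorise`, `cluster_utterances`) and the `threshold` value are purely illustrative.

```python
import math
import re
from collections import Counter


def vectorise(text):
    """Turn an utterance into a bag-of-words count vector (a stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def cluster_utterances(utterances, threshold=0.4):
    """Greedy single-pass clustering: join the most similar cluster seed, else start a new cluster."""
    clusters = []  # each cluster is {"seed": vector, "members": [utterances]}
    for utterance in utterances:
        vector = vectorise(utterance)
        best, best_score = None, threshold
        for cluster in clusters:
            score = cosine(vector, cluster["seed"])
            if score >= best_score:
                best, best_score = cluster, score
        if best is None:
            clusters.append({"seed": vector, "members": [utterance]})
        else:
            best["members"].append(utterance)
    return [cluster["members"] for cluster in clusters]


# Hypothetical utterances, invented for illustration only.
utterances = [
    "I want to check my account balance",
    "what is my account balance",
    "my card is lost",
    "my card was lost yesterday",
    "show my account balance",
]
clusters = cluster_utterances(utterances)
for members in clusters:
    print(members)
```

In practice the word-count vectors would be replaced by embeddings from a language model, so that utterances with different wording but the same meaning also land in the same cluster.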

2️⃣ Design

Human-In-The-Loop (HITL) Intent & Entity Discovery & ML-Assisted Labelling. Human-In-The-Loop training helps with the initial labelling of clusters which can be leveraged for future unsupervised clustering. Machine Assisted Labelling fast-tracks manual tasks.

Manage Labelled Data (Intents, Entities). Labelled data needs to be managed in terms of activating and deactivating intents or entities, managing training data and examples. Repurposing data taxonomies leads to optimisation and standardisation.

3️⃣ Evaluate

Model Evaluation & Fine-Tuning Results. This involves the ability to test a trained model’s performance (using metrics like F1 score, accuracy, etc.) against any number of NLU providers, using techniques like K-fold splits and test datasets.
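
As a rough illustration of K-fold evaluation with an F1 score, the sketch below uses only the Python standard library. The keyword-counting "model" is a toy stand-in for a real NLU provider, the labelled examples are invented, and the split is not stratified; all names here are assumptions made for the example.

```python
from collections import Counter, defaultdict


def k_fold_splits(examples, k):
    """Yield (train, test) pairs; every example lands in exactly one test fold."""
    for fold in range(k):
        test = examples[fold::k]
        train = [ex for i, ex in enumerate(examples) if i % k != fold]
        yield train, test


def train_keyword_model(train):
    """Toy stand-in for an NLU provider: count which words appear under each intent."""
    word_counts = defaultdict(Counter)
    for text, intent in train:
        word_counts[intent].update(text.lower().split())
    return word_counts


def predict(model, text):
    """Pick the intent whose training words overlap most with the utterance."""
    words = text.lower().split()
    return max(sorted(model), key=lambda intent: sum(model[intent][w] for w in words))


def macro_f1(gold, predicted):
    """Average the per-intent F1 scores, weighting every intent equally."""
    pairs = list(zip(gold, predicted))
    scores = []
    for intent in sorted(set(gold)):
        tp = sum(g == intent and p == intent for g, p in pairs)
        fp = sum(g != intent and p == intent for g, p in pairs)
        fn = sum(g == intent and p != intent for g, p in pairs)
        scores.append(2 * tp / (2 * tp + fp + fn) if tp else 0.0)
    return sum(scores) / len(scores)


# Hypothetical labelled data, invented for illustration only.
examples = [
    ("check my balance", "balance"),
    ("I lost my card", "lost_card"),
    ("what is my balance", "balance"),
    ("my card is lost", "lost_card"),
    ("show account balance", "balance"),
    ("card lost yesterday", "lost_card"),
]

gold, predicted = [], []
for train, test in k_fold_splits(examples, k=3):
    model = train_keyword_model(train)
    for text, intent in test:
        gold.append(intent)
        predicted.append(predict(model, text))

accuracy = sum(g == p for g, p in zip(gold, predicted)) / len(gold)
print(f"accuracy={accuracy:.2f} macro_f1={macro_f1(gold, predicted):.2f}")
```

Swapping `train_keyword_model` and `predict` for calls to different NLU providers, while keeping the same folds, is what makes the resulting scores comparable across providers.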

4️⃣ Improve

Intent Refactoring Flows (Merge, Split). Intents need to be flexible, in terms of splitting intents, merging, or creating sub/nested intents, etc. The ability to re-use and import existing labelled data across projects also leads to high-quality data.
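
A merge or split can be pictured as a simple transformation of the labelled dataset. The sketch below assumes the dataset is a plain dictionary mapping intent names to training examples, and uses keyword rules as a stand-in for the human decisions a real refactoring flow would involve; the function names and data are hypothetical.

```python
def merge_intents(dataset, sources, target):
    """Return a new dataset where all examples of `sources` live under `target`."""
    merged = {name: list(examples) for name, examples in dataset.items() if name not in sources}
    for name in sources:
        merged.setdefault(target, []).extend(dataset[name])
    return merged


def split_intent(dataset, source, rules, default):
    """Return a new dataset where `source` is split by keyword rules.

    `rules` maps a new intent name to a keyword; unmatched examples go to `default`.
    """
    result = {name: list(examples) for name, examples in dataset.items() if name != source}
    for example in dataset[source]:
        target = next(
            (name for name, keyword in rules.items() if keyword in example.lower()),
            default,
        )
        result.setdefault(target, []).append(example)
    return result


# Hypothetical labelled data, invented for illustration only.
dataset = {
    "card_issue": ["I lost my card", "my card was stolen", "card not working"],
    "greeting": ["hello"],
    "hi": ["hi there"],
}

# Merge two overlapping intents into one.
merged = merge_intents(dataset, ["greeting", "hi"], "greeting")

# Split a broad intent into narrower ones.
refactored = split_intent(
    merged,
    "card_issue",
    {"card_lost": "lost", "card_stolen": "stolen"},
    default="card_other",
)
print(refactored)
```

Both functions return a new dataset rather than changing the original, so a refactoring step can be evaluated and discarded if it does not improve the model.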

As stated earlier, most of the assessed frameworks address the process of intent and entity labelling and management. Below is an analysis of the additional NLU design capabilities they provide.

HumanFirst

HumanFirst is the only tool focused on providing the full end-to-end NLU design capabilities. With a pluggable data pipeline, it allows teams to integrate different NLU providers (for model training and evaluation) as well as to incorporate large language models to power the core ML-assisted workflows (like semantic search & clustering).

This is one of the primary reasons I decided to join HumanFirst: their prowess in the areas I defined in this chart.

Snorkel AI

Snorkel AI has a programmatic approach to data exploration and labelling. Their focus is to accelerate time to value with a transformative programmatic approach to data labelling.

Rasa X

Rasa X serves as an NLU inbox for reviewing customer conversations, filtering conversations on set criteria and annotating entities and intents.

Cognigy

Cognigy has an intent analyser where intent training records can be imported. With a Human-In-The-Loop approach, records can be manually added to an intent, skipped or ignored. Export and import of the Intent Trainer records are possible by date range.

Nuance Mix

Nuance Mix’s auto-intent functionality analyses and groups semantically similar sentences. In turn, the user can examine these clusters by visual inspection, accepting or rejecting entries.

Unfortunately, the detection process takes a few hours, and no progress bar or completion notification is available. This does not lend itself to quick, iterative improvement; given that the process is not streamlined or automated, at this stage it’s hard to apply at scale.

Oracle Digital Assistant

Documents in HTML and PDF format can be uploaded and intents defined. Intent names are auto-generated together with a list of auto-generated utterances for each intent. The auto-generated sentences for each identified intent are reminiscent of Yellow AI’s DynamicNLP.

The intent name can be edited and subsequently submitted and incorporated into a skill. Read more about the intent detection functionality of Oracle here.

IBM Watson

What I like about the IBM Watson approach is the ease of supervision by the user. Data can be uploaded in bulk, but inspecting and adding recommendations is manual, allowing for a consistent and controlled augmentation of the skill.

This is an important feature, as the data is grouped into intents, and within each of these defined intents Watson Assistant compiles a list which constitutes the user examples.

DialogFlow CX

DialogFlow CX has a built-in test feature to assist in finding bugs and preventing regressions. Test cases can be created using the simulator to define the desired outcomes.

It does seem like these tests are more focussed on the conversational side of the agent and not NLU in particular.

Kore AI

Kore AI has a batch testing facility and a dashboard displaying test summary results for test coverage, performance and training recommendations. Multiple test suites can be used to validate the intent identification capabilities of an NLU model.

Amazon Lex

The Lex V2 intent detection implementation has two big disadvantages. The first is data size: 10,000 records are required. The second is that data must be in the Contact Lens output file JSON format.

Dashbot

Dashbot is pivoting from a reporting tool to a data discovery tool focussing on analysing customer conversations and clustering those conversations into semantically similar clusters with a visual representation of those clusters.

QBox

QBox focusses on analysing and benchmarking chatbot training data. Training data can be visualised to gain insights into how NLP data is affecting the NLP model.

Botium

Botium focusses on testing in the form of regression, end-to-end, voice, security and NLU performance.

Botium can also be used to optimise the quality as well as quantity of NLU training data, although I don’t have any direct experience with Botium.

Yellow AI

Yellow AI does have test and comparison capabilities for intents and entities; however, they do not seem as advanced as those of competing frameworks like Cognigy or Kore AI.

In Closing

The aim of this comparison is to explore the intersection of NLU design and the tools which are out there. Some of the frameworks are very much closed and there are areas where I made assumptions.

I would love to learn more and am open to discussion on any of the details I list in this article. 🙂

I’m currently the Chief Evangelist @ HumanFirst. I explore and write about all things at the intersection of AI and language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces and more.