Three Key Voicebot Design Considerations

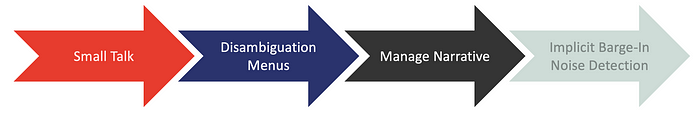

Voicebots pose a number of challenges that text-based chatbots do not. The hardest problem to solve is managing dialog turn-taking in the conversation, and this challenge has to be considered alongside other elements like barge-in and background noise. When we as humans have a telephone conversation, there is a sequence of events. We first exchange pleasantries, then we agree on the reason for the call (intent). This is followed by a process of deciding who goes first and managing interruptions (barge-in).

The TL;DR

- Voicebots have a distinct disadvantage: their design affordances are invisible from a user perspective.

- Small talk needs to be planned for, as users are more prone to small talk on a telephone call than in a chatbot interface, and are in general more verbose.

- The intent of the call needs to be firmly established at the start of the call. After intent is established, the voicebot needs to take charge of the dialog, which requires accurate intent detection.

- Barge-in should not be too sensitive and must be triggered explicitly rather than implicitly.

- An acoustic model can detect background noise fairly accurately. Rather than trying to cancel the noise, advise callers to move to a quiet place or call back later.

Small Talk

Small talk is part of our day-to-day conversation process, and generally in conversations there is an introductory small talk section. This is where the user introduces themselves and often states from where they are calling.

Small talk does not need to be extensive, but basic courtesy should be built into the voicebot.

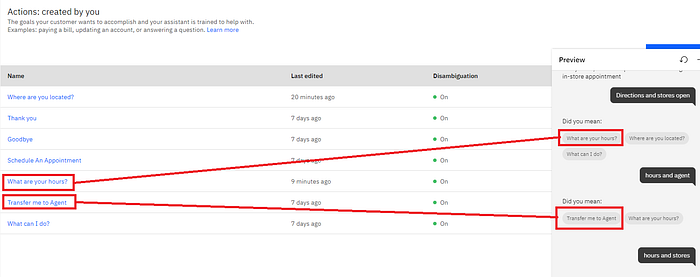

Disambiguation Menus

After small talk, the intent of the conversation needs to be established. This is the same for our human-to-human conversations, where intent is established early on and henceforth underpins the conversation.

The first step is to ask the user to state the reason for their call using only a few words.

This step could be skipped if a propensity engine or some kind of business system lookup could be used to glean the reason for the call upfront.

The voicebot needs to take command of the narrative, as explained in the next section. However, certainty about the intent is required before that step.

The surest way of confirming intent is to make use of disambiguation menus.

Here is an example of how disambiguation menus can be used. Say, for instance, someone calls their mobile operator and states that their phone was stolen. A disambiguation menu can then be presented, in which all the menu items relate to a phone being lost or stolen.

Hence a disambiguation menu can be seen as a theme that groups a collection of related intents. The disambiguation menu can ask the user:

Would you like to…

~ Block your line,

~ Blacklist your device,

~ Perform a SIM swap,

or get suggestions for a new device?
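The menu above can be sketched in code as a theme that groups related intents. This is a minimal illustration only: the intent names, the keyword sets, and the `build_prompt` and `resolve_intent` helpers are all assumptions made for this example, and a production voicebot would rely on its NLU engine rather than keyword matching.

```python
import re

# Hypothetical menu definition: one theme groups several related intents.
# Intent names and keywords are illustrative assumptions, not from a real system.
MENUS = {
    "lost_or_stolen_phone": [
        ("block_line", "block your line", {"block", "line"}),
        ("blacklist_device", "blacklist your device", {"blacklist"}),
        ("sim_swap", "perform a SIM swap", {"sim", "swap"}),
        ("new_device", "get suggestions for a new device", {"new", "suggestions"}),
    ],
}


def build_prompt(theme):
    """Turn the options for a theme into one spoken question."""
    labels = [label for _, label, _ in MENUS[theme]]
    if len(labels) == 1:
        return f"Would you like to {labels[0]}?"
    return f"Would you like to {', '.join(labels[:-1])}, or {labels[-1]}?"


def resolve_intent(theme, utterance):
    """Return the single matching intent, or None if zero or several match."""
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    matches = [intent for intent, _, keywords in MENUS[theme] if words & keywords]
    return matches[0] if len(matches) == 1 else None
```

Returning `None` when nothing matches, or when more than one option matches, gives the dialog a clear signal to repeat the menu instead of guessing.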

Take Command Of The Narrative

Once intent is clearly established and ambiguity is removed as much as possible, the voicebot needs to take command of the narrative and the dialog turns.

Especially in a domain-specific corporate implementation, there will be longer customer procedures to navigate, and hence a fixed sequence of events the user needs to go through.

Barge-in should be explicit rather than implicit. With implicit barge-in, random noises, the user coughing, and similar sounds are treated as a barge-in and break the dialog flow.

An explicit barge-in is often when the user says something which is unrelated to the current dialog turn. At this juncture the user can be asked if they would like to repeat their input, or end the current process and talk about something else.
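To make the distinction concrete, here is a minimal sketch of an explicit barge-in check. It assumes the speech and NLU layers hand over a transcript, a detected intent, and a confidence score for anything heard while the bot is speaking. The `Interruption` type, the `handle_interruption` function, and the 0.7 threshold are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    IGNORE = "ignore"                  # cough, noise, unintelligible audio
    CONTINUE = "continue"              # input fits the current dialog turn
    CONFIRM_SWITCH = "confirm_switch"  # explicit barge-in: ask repeat or switch


@dataclass
class Interruption:
    transcript: str
    intent: Optional[str]  # intent detected by the NLU layer, if any
    confidence: float      # NLU confidence between 0 and 1


def handle_interruption(event, expected_intents, min_confidence=0.7):
    """Decide what to do with audio picked up during a dialog turn."""
    # No recognisable, confident intent: treat it as noise, not a barge-in.
    if (
        not event.transcript.strip()
        or event.intent is None
        or event.confidence < min_confidence
    ):
        return Action.IGNORE
    # The user is answering the current question: carry on with the flow.
    if event.intent in expected_intents:
        return Action.CONTINUE
    # A confident but unrelated intent: ask whether to repeat or change topic.
    return Action.CONFIRM_SWITCH
```

Only the last branch interrupts the flow, which keeps coughs and stray sounds from derailing a fixed procedure.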

Accidental Noise Detection

For automatic speech recognition, the process of converting speech into text, an acoustic model can be trained on a sample of customer utterances. In my experience, an acoustic model significantly improves the accuracy of transcribing voice to text.

Something else I found (by accident) was that the acoustic model transcribed noise in a very specific way. The phrases transcribed for noise were consistent enough to detect a noisy environment and advise the user to move to a quiet spot or call back later.
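One way to act on this observation is to keep a list of the phrases the model tends to produce for noise and count how often they show up in recent turns. The sketch below is an assumption-laden illustration: the phrases in `NOISE_PHRASES` are placeholders (the real ones depend entirely on the trained acoustic model), and the function names and threshold are hypothetical.

```python
import re

# Placeholder phrases only. In practice these would be collected by reviewing
# what a specific acoustic model outputs for known noisy recordings.
NOISE_PHRASES = {"hmm", "uh huh", "the the"}


def normalise(transcript):
    """Lowercase the transcript and strip punctuation and extra spaces."""
    return " ".join(re.findall(r"[a-z']+", transcript.lower()))


def is_noise(transcript):
    """True if the whole transcript matches a known noise phrase."""
    return normalise(transcript) in NOISE_PHRASES


def should_advise_quiet_place(recent_transcripts, threshold=2):
    """True if enough recent turns look like transcribed noise."""
    noisy_turns = sum(is_noise(t) for t in recent_transcripts)
    return noisy_turns >= threshold
```

Requiring several noisy turns before advising the caller avoids reacting to a single stray sound.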

In Closing

Solving for conversational turn-taking and barge-in will be difficult without elements like:

- Gesture Recognition

- Lip Activity Detection

- Object Detection

- Gaze Detection

However, making use of the design principles I list in this article will go a long way in improving a voicebot’s NPS, CSAT, containment and problem resolution rate.