Five Approaches To Managing Conversational Dialog

And Which Elements Can Play A Supporting Role

Introduction

When building a chatbot, developing, managing and fine-tuning the dialog flow state is important. Certain conversational AI elements are intended to serve as the backbone of the dialog, while others should play a supporting role or be used only for specific use-cases.

There are 5 broad approaches to managing a conversation:

- Intents, Entities, Dialog State Systems & Bot Messages

- ML Stories

- Chitchat

- Large Language Models

- Knowledge Base Management

1️⃣ Intents, Entities, Dialog State Systems & Bot Messages

This is the stock-standard approach to digital assistants or chatbots. Intents sit at the front line, sometimes preceded by a high-pass Natural Language Processing (NLP) layer for sentence boundary detection, summarisation, classification, keyword extraction, etc. Intent detection is followed by entity extraction and state-based or condition-based dialog management.
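
To make this pipeline concrete, here is a minimal Python sketch of intent classification, entity extraction and state-based dialog management. It is a toy under stated assumptions: the keyword matcher stands in for a trained NLU model, and the intents, the `account_type` entity and the dialog node names are hypothetical, not any vendor's API.

```python
import re
from dataclasses import dataclass, field

# Hypothetical keyword sets standing in for a trained intent classifier.
INTENT_KEYWORDS = {
    "check_balance": {"balance", "funds"},
    "open_account": {"open", "account"},
}


def classify_intent(text: str) -> str:
    """Return the intent with the largest keyword overlap, or 'fallback'."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    best, best_hits = "fallback", 0
    for intent, keywords in INTENT_KEYWORDS.items():
        hits = len(tokens & keywords)
        if hits > best_hits:
            best, best_hits = intent, hits
    return best


def extract_entities(text: str) -> dict:
    """Hypothetical entity: the account type the user mentions."""
    match = re.search(r"\b(savings|cheque|credit)\b", text.lower())
    return {"account_type": match.group(1)} if match else {}


@dataclass
class DialogState:
    node: str = "start"
    slots: dict = field(default_factory=dict)


def step(state: DialogState, text: str) -> str:
    """Advance the state machine by one user turn and return the bot reply."""
    intent = classify_intent(text)
    state.slots.update(extract_entities(text))
    if state.node == "start" and intent == "open_account":
        state.node = "await_account_type"
    if state.node == "await_account_type":
        if "account_type" in state.slots:
            account_type = state.slots.pop("account_type")
            state.node = "start"
            return f"Opening a {account_type} account."
        return "Which type of account: savings, cheque or credit?"
    if intent == "check_balance":
        return "Let me fetch your balance."
    return "Sorry, I did not understand."
```

Note how a reply such as "savings please" matches no intent at all; it is the `await_account_type` node that gives the extracted entity its meaning. This is the predictability, and the rigidity, of the state machine approach.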

🟢 Advantages:

- Scales well as functionality and scope are added.

- Easy to manage, and the standard approach the Gartner leaders are leaning towards.

- Design and development of conversational experiences are merging.

- The Dialog State machine makes it easy to have a message abstraction layer for management of bot responses based on the conversational medium.

- Due to the ubiquitous nature of this architecture, skilled and experienced professionals are available.

- This architecture makes it easier to segment the development process for parallel work, to some degree.

- Leads towards a more controlled and predictable user experience.

- Fine-tuning is baked into the architecture.
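
The message abstraction layer mentioned above can be sketched as a lookup keyed on dialog node and conversational medium. The node name, the channel names (`web`, `voice`, `sms`) and the fall-back-to-web behaviour are illustrative assumptions, not a specific platform's feature.

```python
# Hypothetical response catalogue: one message per (dialog node, medium) pair.
RESPONSES = {
    ("await_account_type", "web"): "Which account would you like? [Savings] [Cheque] [Credit]",
    ("await_account_type", "voice"): "Which account would you like: savings, cheque or credit?",
    ("await_account_type", "sms"): "Reply SAVINGS, CHEQUE or CREDIT.",
}

DEFAULT_MEDIUM = "web"


def render(node: str, medium: str) -> str:
    """Return the bot message for a dialog node, adapted to the channel."""
    # Use the default medium's wording when no channel-specific text exists.
    return RESPONSES.get((node, medium), RESPONSES[(node, DEFAULT_MEDIUM)])
```

Because the state machine only emits a node name, the wording per channel can be changed without touching the dialog logic.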

🔴 Disadvantages:

- The conversational experience is not flexible, as it is still governed by the underlying state machine. A probabilistic classifier for predicting the next dialog turn would help by adding flexibility to some areas of the conversation.

- Large knowledge bases cannot be natively absorbed without considerable work in segmentation and data preparation.

- Incorporation of Large Language Models (LLMs) is not yet commonplace from a dialog perspective. Regarding LLMs, Oracle Digital Assistant (ODA) does have a trainable auto-complete feature.

2️⃣ Machine Learning Stories

The ideal would be a probabilistic classifier of sorts, which observes the user input and replies with the most appropriate message. Where a rigid set of steps is required, such as opening an account, a sequential, set-state approach can be followed. Alongside ML Stories, Rasa also has a Rules approach: a type of training data describing short pieces of conversation that should always follow the same sequence.
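
The sketch below illustrates the idea of combining deterministic rules with learned stories. It is not Rasa's API or its TED policy; it swaps in a simple frequency count with back-off, and the intents and action names are hypothetical. Rules always win; otherwise the next action is predicted from the most specific story context seen in training, backing off to the intent alone so that unseen conversation paths still get a prediction.

```python
from collections import Counter, defaultdict

# Rules: short snippets that must always follow the same sequence.
RULES = {"greet": "utter_greet", "goodbye": "utter_goodbye"}

# Hypothetical training stories: (user intent, bot action) turns.
STORIES = [
    [("check_balance", "action_show_balance"), ("thank_you", "utter_welcome")],
    [("check_balance", "action_show_balance"), ("transfer_money", "action_transfer")],
    [("transfer_money", "action_transfer"), ("thank_you", "utter_welcome")],
]


def train(stories):
    """Count the bot action that followed each context in the stories."""
    context_counts = defaultdict(Counter)  # (previous action, intent) -> actions
    intent_counts = defaultdict(Counter)   # intent alone -> actions (back-off)
    for story in stories:
        previous = None
        for intent, action in story:
            context_counts[(previous, intent)][action] += 1
            intent_counts[intent][action] += 1
            previous = action
    return context_counts, intent_counts


def predict(intent, previous, model, fallback="action_default_fallback"):
    """Return (next action, relative frequency as a rough confidence)."""
    context_counts, intent_counts = model
    if intent in RULES:  # rules are deterministic and always win
        return RULES[intent], 1.0
    # Most specific context first, then back off to the intent alone.
    for counter in (context_counts.get((previous, intent)), intent_counts.get(intent)):
        if counter:
            action, hits = counter.most_common(1)[0]
            return action, hits / sum(counter.values())
    return fallback, 0.0
```

For example, a money transfer requested right after a thank-you never occurs in the stories above, yet `predict("transfer_money", "utter_welcome", train(STORIES))` still returns `action_transfer` via the back-off.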

🟢 Advantages:

- ML Stories can generalise to unseen conversation paths.

- A natural conversation can be followed, users can digress and move from one conversation to another.

- The conversational agent’s whole domain is leveraged for the conversational experience, and different user conversations can be submitted for training to cover more possible subsequent scenarios.

- Conversational designs or flows can be seen as disposable: a flow that is not used is not developed further, while user stories that are in demand can be built out.

- There is an element of control and fine-tuning with stories, which is not entirely the case with zero- or few-shot LLM-based chatbots.

- For enterprises, the vast majority of chatbot use-cases are limited to a few scenarios; ML stories can focus on these while catering for all the deviations.

🔴 Disadvantages:

- The probabilistic approach is seen as risky by enterprises and large organisations.

- It is a new way of working for teams; currently, much emphasis is placed on the canvas approach to conversational design and development, and on graphical collaboration.

- Chatbot testers need to understand the context of stories; misalignment might be a challenge.

- Steep learning curve.

3️⃣ Chitchat / Smalltalk

Many chatbot implementations cater for chitchat.

This is where smalltalk is incorporated into the chatbot, including basic courtesy and the graceful handling of errors and exceptions in a highly conversational manner.

Simple ways to improve general chitchat are to avoid repeating messages and to prevent any form of fallback proliferation. Identifying users can help create context for conversations.
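
Both tactics can be sketched in a few lines. This is a hypothetical design, not a feature of a specific platform: replies rotate so the same variant is never sent twice in a row, and after a configurable number of consecutive fallbacks the bot escalates (here, to a human agent) instead of looping. The intent names and messages are assumptions.

```python
import random


class ChitchatResponder:
    """Rotates reply variants and caps consecutive fallbacks (hypothetical design)."""

    def __init__(self, variants, max_fallbacks=2, seed=None):
        self.variants = variants          # intent -> list of alternative replies; must include "fallback"
        self.max_fallbacks = max_fallbacks
        self.last_reply = {}              # intent -> reply sent last time
        self.fallback_streak = 0
        self.rng = random.Random(seed)

    def reply(self, intent):
        if intent not in self.variants:
            intent = "fallback"
        if intent == "fallback":
            self.fallback_streak += 1
            # Stop fallback proliferation: escalate instead of repeating "Sorry?".
            if self.fallback_streak > self.max_fallbacks:
                self.fallback_streak = 0
                return "Let me hand you over to a human agent."
        else:
            self.fallback_streak = 0
        # Never send the same variant twice in a row for an intent.
        options = [v for v in self.variants[intent] if v != self.last_reply.get(intent)]
        choice = self.rng.choice(options or self.variants[intent])
        self.last_reply[intent] = choice
        return choice
```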

Of course chitchat is just an aid in managing the conversation, in most cases. There are instances where smalltalk can support the whole conversation, but these are general, companion, non-domain specific chatbots.

If a chatbot is developed in a minority language, then out-of-the-box chitchat will most probably not be available.

🟢 Advantages:

- Chitchat makes the conversational agent more natural.

- Also, chitchat can be used to make the conversation more personalised in instances where users can be identified.

- A large part of the first interactions with any conversational agent is people just exploring the interface and asking general and often random questions. If these can be fielded and the conversation managed, the first impression can be positive.

🔴 Disadvantages:

- Setting the boundaries of chitchat can be challenging: can users ask about the weather, the time, etc.?

- Users must not confuse domain-specific conversational implementations with general, broad-domain implementations such as Google Assistant, Siri and Alexa.

- Care must be taken to leverage chitchat in such a way that the conversation gains traction, so that definite intents can be extracted.

- Developing chitchat is time-consuming, and defining what will most probably be chitchat even more so. Leveraging chitchat from LLMs is a possibility.

4️⃣ Large Language Models (LLM)

Large Language Models (LLMs) offer a whole array of implementations with which the dialog of a conversational agent can be created. LLMs give a large degree of flexibility, with zero- to few-shot training. In other words, much can be achieved with little to no training data or effort. This flexibility is astounding at first, and the implementation possibilities flood one’s mind.
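
Zero- and few-shot use largely comes down to how the prompt is built. Below is a minimal sketch that assembles a few-shot intent-classification prompt; passing no examples yields a zero-shot prompt. The prompt layout and the example intents are assumptions, and the call to an actual LLM endpoint is deliberately left out, as each provider's API differs.

```python
# Hypothetical labelled examples; a handful is often enough for few-shot use.
EXAMPLES = [
    ("I lost my card", "report_lost_card"),
    ("How much money do I have?", "check_balance"),
]


def few_shot_prompt(utterance, examples=EXAMPLES,
                    instruction="Classify the customer message into an intent."):
    """Build a text prompt; an empty examples list gives a zero-shot prompt."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Message: {text}\nIntent: {label}\n")
    # Leave the final label blank for the model to complete.
    lines.append(f"Message: {utterance}\nIntent:")
    return "\n".join(lines)
```

Adding a new intent here means adding an example line, not retraining a model, which is where the flexibility (and the lack of control) comes from.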

However, flexibility without control does not scale well, and fine-tuning is crucial for any implementation. LLMs do offer a degree of fine-tuning, but it does not extend to fine-tuned dialog management.

LLM providers include OpenAI’s Language API, co:here, AI21labs and HuggingFace.

LLMs excel at a wide range of language tasks, including Natural Language Generation (NLG). LLMs can also play a vital role in supporting a chatbot implementation.

🟢 Advantages:

- Zero- to few-shot training.

- Multiple language related tasks can be performed.

- LLMs can play a vital supporting role in chatbots, for instance in extracting named entities, summarisation, text classification and NLG.

- Democratising access to Large Language Models and powerful processing.

- No-code, cloud based approach, which can grow into low-code and eventually pro-code.

- Training data does not have to be extremely large or in specific formats.
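
As an example of this supporting role, an LLM can be asked to return entities as JSON while the chatbot keeps control by validating the output. The `complete` parameter is a hypothetical stand-in for whichever provider's completion call is used, and the prompt wording is also an assumption.

```python
import json
from typing import Callable


def extract_entities_with_llm(utterance: str,
                              complete: Callable[[str], str],
                              allowed: set) -> dict:
    """Ask an LLM for entities as JSON and keep only well-formed, expected keys."""
    prompt = (
        "Extract the entities from the message as a JSON object "
        f"using only these keys: {', '.join(sorted(allowed))}.\n"
        f"Message: {utterance}\nJSON:"
    )
    raw = complete(prompt)  # hypothetical call into any LLM completion endpoint
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        return {}  # let the regular NLU and dialog flow handle the turn
    if not isinstance(parsed, dict):
        return {}
    # Do not let the model introduce slots the dialog manager does not know about.
    return {key: value for key, value in parsed.items() if key in allowed}
```

The design choice is that the LLM only proposes values; the dialog state machine still decides which slots exist and what happens next.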

🔴 Disadvantages:

- Cost is both an advantage and a disadvantage. Initially cost will be low, but as volume and functionality are added, cost will escalate.

- Fine-tuning, i.e. training on custom and proprietary data, will be a challenge.

- LLMs can maintain a coherent, general dialog that keeps context, with NLG and without repetition. However, the dialog itself cannot be programmed.
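
The cost escalation is easiest to see with usage-based, per-token pricing. The sketch below assumes billing per 1,000 tokens; the volumes and the price are placeholder numbers for illustration, not any provider's actual rates.

```python
def monthly_llm_cost(conversations_per_month: int,
                     calls_per_conversation: int,
                     tokens_per_call: int,
                     price_per_1k_tokens: float) -> float:
    """Rough usage-based cost: every LLM call is billed per token (placeholder pricing)."""
    tokens = conversations_per_month * calls_per_conversation * tokens_per_call
    return tokens / 1000 * price_per_1k_tokens
```

With these placeholder figures, a pilot of 1,000 conversations a month, at 2 calls of 500 tokens each and $0.02 per 1,000 tokens, costs about $20. Scaling to 100,000 conversations with 4 calls each (more functionality) brings it to about $4,000: a 200-fold increase for 100 times the traffic.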

5️⃣ Knowledge Base Management

Firstly, one could address question answering with an approach other than a traditional knowledge base: via the traditional chatbot development affordances of intents, entities, dialog trees and response messages.

🟢 Advantages:

- The process and approach form part of existing chatbot development process.

- Easy integration with existing chatbot journeys, acting as an extension of current conversational functionality.

- QnA experiences can be transformed into an integrated journey.

🔴 Disadvantages:

- Maintenance intensive in terms of NLU (intents & entities), dialog state management and dialog management.

- Does not scale well with large amounts of dynamic data.

- Semantic search is more adept at finding one or more matching answers.

A second level is where a custom knowledge base is set up. This can be done via various means: Elasticsearch, Watson Discovery, Rasa knowledge base actions, OpenAI Language API with fine-tuning, or Pinecone.

Second-level knowledge bases are focused on domain-specific search data, and on loading that data and making it searchable.

A challenge with a second-level knowledge base is having an effective message abstraction layer. Response messages should be flexible: a portion of a response might be more appropriate for a specific question, or two or more messages might need to be merged for a more accurate response.
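
The sketch below illustrates both ideas behind a flexible message abstraction layer: scoring individual sentences so only the relevant portion of a message is returned, and merging the best sentences from different messages into one reply. It assumes a tiny in-memory knowledge base and uses bag-of-words cosine similarity as a stand-in for a real search engine or vector database; `top_k` and `threshold` are illustrative values.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base messages.
KB = {
    "fees": "Monthly account fees are 5 dollars. Fees are waived for students.",
    "cards": "Lost cards can be blocked in the app. Replacement cards arrive in five days.",
}


def vectorise(text):
    """Bag-of-words term counts (stand-in for a real embedding)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def answer(question, kb=KB, top_k=2, threshold=0.2):
    """Return the best-matching sentences merged into one reply, or None."""
    q = vectorise(question)
    # Score sentences, not whole messages, so only the relevant portion is used.
    sentences = [s.strip() for doc in kb.values()
                 for s in re.split(r"(?<=\.)\s+", doc) if s.strip()]
    scored = sorted(((cosine(q, vectorise(s)), s) for s in sentences), reverse=True)
    picked = [s for score, s in scored[:top_k] if score >= threshold]
    # Merge the best sentences (possibly from different messages) into one reply.
    return " ".join(picked) if picked else None
```

Returning `None` lets the caller decide what happens when nothing relevant is found, rather than sending a weak match.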

🟢 Advantages:

- Scales well with large bodies of data that change continuously.

- Lower maintenance, as the incorporated search options take care of data retrieval.

- Benefits from advances in semantic search, vector databases and more.

- Knowledge bases counter chatbot fallback proliferation, since they will most probably have a domain-related answer to the question.
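
One way a knowledge base counters fallback proliferation is by sitting between the intent classifier and the fallback message. Below is a minimal routing sketch, assuming hypothetical `classify` and `search_kb` callables and an illustrative 0.7 confidence threshold.

```python
from typing import Callable, Optional, Tuple


def route(utterance: str,
          classify: Callable[[str], Tuple[str, float]],
          search_kb: Callable[[str], Optional[str]],
          intent_threshold: float = 0.7) -> Tuple[str, str]:
    """Return (source, payload): a confident intent, a knowledge base answer, or a fallback."""
    intent, confidence = classify(utterance)
    if confidence >= intent_threshold:
        return "intent", intent            # hand over to the dialog state machine
    answer = search_kb(utterance)
    if answer:
        return "knowledge_base", answer    # domain answer instead of a fallback
    return "fallback", "Sorry, I can't help with that yet."
```

Only utterances that neither the NLU model nor the knowledge base can handle reach the fallback message.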

🔴 Disadvantages:

- More demanding in terms of technical skills.

- Cost might be a consideration.

- An additional dimension is added to the Conversational AI landscape to manage.

Lastly, a third level covers instances where general, non-domain-specific questions can be asked, and where a vast general knowledge base needs to be leveraged. This can be Wikipedia, GPT-3, etc.

OpenAI’s Language API does a good job of fielding general knowledge questions in a very natural way, without any dialog or message management. In the image below, general random questions are fielded in short, well-formed sentences.

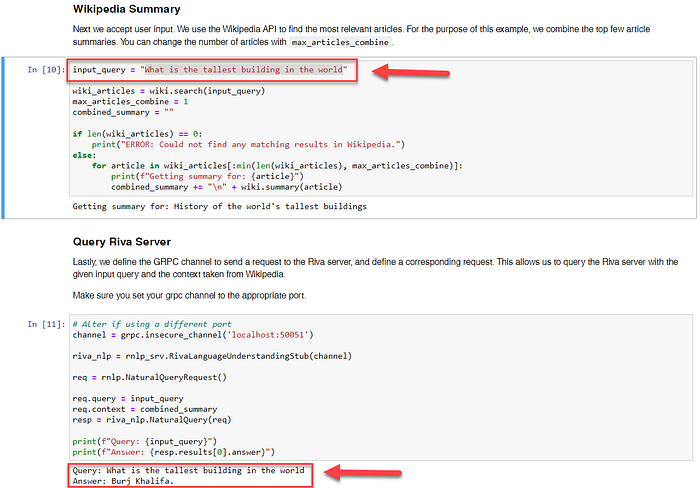

NVIDIA Riva has a general Question and Answer chatbot where Wikipedia is leveraged, as seen from the Notebook example below.