With Conversations Alexa Wants To Solve Dialog State Management

A Number Of Chatbot Design Principles Can Be Gleaned From This Approach

Introduction

Often with a new technology or a new approach to an old problem, it’s good to try to understand the underlying principles employed to solve the problem.

Alexa Conversations (AC) is an attempt from Amazon to move past single-turn voice commands and allow users to have longer, more natural, multi-turn conversations.

AC does two things:

- Firstly, AC deprecates the normal approach of intents fronting the conversation, where each conversation is segmented according to a defined intent.

- Secondly, and perhaps most importantly, AC deprecates the dialog flow state machine.

Traditionally, longer conversations with multiple dialog turns per session necessitate a myriad of connected dialog states, with each dialog node accessed only when a set of conditions is met.
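To make the traditional approach concrete, here is a minimal sketch of a hand-written dialog state machine. The pizza-ordering states, slot names, and the `next_state` helper are assumptions made up for illustration; they do not come from any Alexa SDK. Every node and every guarding condition has to be spelled out by the developer, which is the burden AC aims to remove.

```python
# Hypothetical pizza-ordering flow; state and slot names are illustrative only.
TRANSITIONS = {
    # state: (slot that must be filled to leave the state, next state)
    "ASK_SIZE": ("size", "ASK_TOPPING"),
    "ASK_TOPPING": ("topping", "CONFIRM"),
    "CONFIRM": ("confirmed", "DONE"),
}


def next_state(state: str, slots: dict) -> str:
    """Advance only when the condition guarding the current node is met."""
    if state not in TRANSITIONS:
        return state  # terminal state, nothing left to ask
    required_slot, target = TRANSITIONS[state]
    return target if slots.get(required_slot) else state


state = "ASK_SIZE"
slots = {}
for slot, value in [("size", "large"), ("topping", "mushroom"), ("confirmed", True)]:
    slots[slot] = value
    state = next_state(state, slots)

print(state)  # DONE
```

Note that this sketch only handles the one path the developer anticipated; a user who gives the topping before the size would stall in `ASK_SIZE`.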

Most voice interfaces, and Alexa skills specifically, are very much single-turn command-and-control applications. The ambition to make Alexa skills multi-turn and conversational is a good approach.

There is, however, an argument that speech applications should not be conversational merely for the sake of being conversational.

The ideal is to have as much contextual awareness as possible for each conversation, although the level of awareness will vary per call. Contextual awareness allows the smallest and most applicable conversational surface to be exposed to the user, which contributes to efficiency and enhances the user experience.

Alexa Conversations Objectives

The objectives of Alexa Conversations are:

- Use an AI model to bridge the gap between conversations built manually and the vast range of possible conversations that need to be provided for.

- Supply sets of sample conversations, from which the AC AI extrapolates the spectrum of phrasing variations and dialog paths.

- Developers do not need to identify & code every possible way users might converse.

- The AI model creates the permutations and handles dialog state management, context carry-over, and corrections.

- Solve for unanticipated conversational paths.

The functions included are:

- State management — Selects and renders Alexa speech prompts to guide the user to the next state.

- Dialog variations — Asks the user follow-up questions to gather missing information.

- User-driven corrections — Handles the user changing their mind.

- Context carry-over — Updates an option without needing the user to repeat the other options.
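The follow-up questions, user-driven corrections, and context carry-over listed above can be pictured with a small sketch. This is not how the AC model works internally (that is a trained model); it is only a plain-Python illustration of the behavior, and the function and slot names are hypothetical.

```python
def apply_user_turn(context: dict, new_slots: dict) -> dict:
    """Merge newly mentioned slots into the context, keeping everything else."""
    updated = dict(context)
    updated.update(new_slots)
    return updated


def missing_slots(context: dict, required: list) -> list:
    """Slots the assistant still has to ask a follow-up question about."""
    return [name for name in required if name not in context]


REQUIRED = ["size", "crust", "topping"]  # illustrative slot names

# Turn 1: the user supplies two of the three options.
context = apply_user_turn({}, {"size": "large", "topping": "ham"})
print(missing_slots(context, REQUIRED))  # ['crust']

# Turn 2: the follow-up question is answered.
context = apply_user_turn(context, {"crust": "thin"})

# Turn 3: the user changes their mind about the size only.
context = apply_user_turn(context, {"size": "medium"})
print(context)
```

After the correction, `crust` and `topping` are untouched, so the user does not have to repeat them.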

The Good

- The advent of compound slots/entities that can be decomposed, which adds data structures to entities.

- Deprecating the dialog state machine and creating an AI model to manage the conversation.

- Making voice assistants more conversational, with multi-turn dialogs.

- Contextually annotated entities/slots.

- Error messages during the building of the model were descriptive and helpful.

The Not So Good

- It might sound negligible, but building the model takes a while. I found that errors in my model were surfaced at the beginning of the build process, at which point training stopped. Should your model have no errors, the build time is long.

- Defining utterance sets is cumbersome. Creating utterance sets for a large implementation with a large number of slots/entities is not ideal.

- It is complex, especially compared to an environment like Rasa. The art is to improve the conversational experience by introducing complex AI models while simultaneously simplifying the development environment.

- Above, a single and very simple dialog set (one of multiple dialog sets) with its conversation example. Dialog sets need to represent example conversations.

- Alexa Conversations only works for English (US) skills.

Challenges & Considerations:

- Fine-tuning can be problematic, and I would advise against using AC in isolation, as a standalone solution, for a more complex implementation.

- AC can be used to facilitate all or part of the dialog management for a skill, and the dialog can switch between traditional intent-based dialog management and Alexa Conversations.

- For larger implementations, the sets of example dialogs can also become quite extensive, each with multiple examples.

- A single dialog set cannot end on a user utterance. It needs to end on an Alexa utterance.

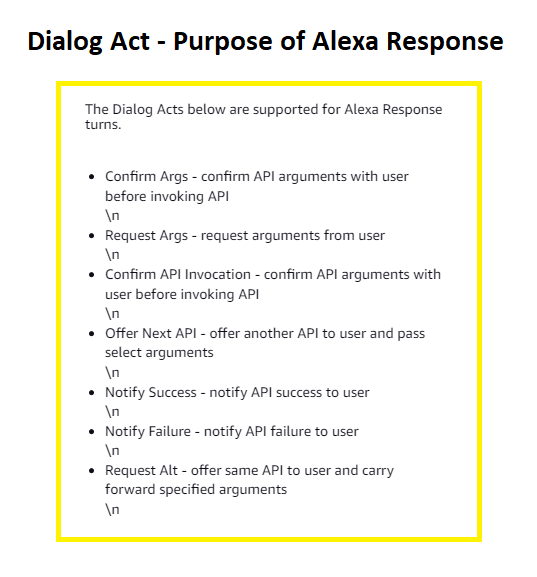

- Each dialog must contain API integration (variable/argument management), and each dialog turn must be linked to a Dialog Act. The dialog acts are listed below; they all relate to the arguments.

- When you configure a skill for Alexa Conversations, you must provide API definitions for all APIs that Alexa Conversations can call during the conversation between the user and Alexa. API definitions specify the requests that your skill code can handle.

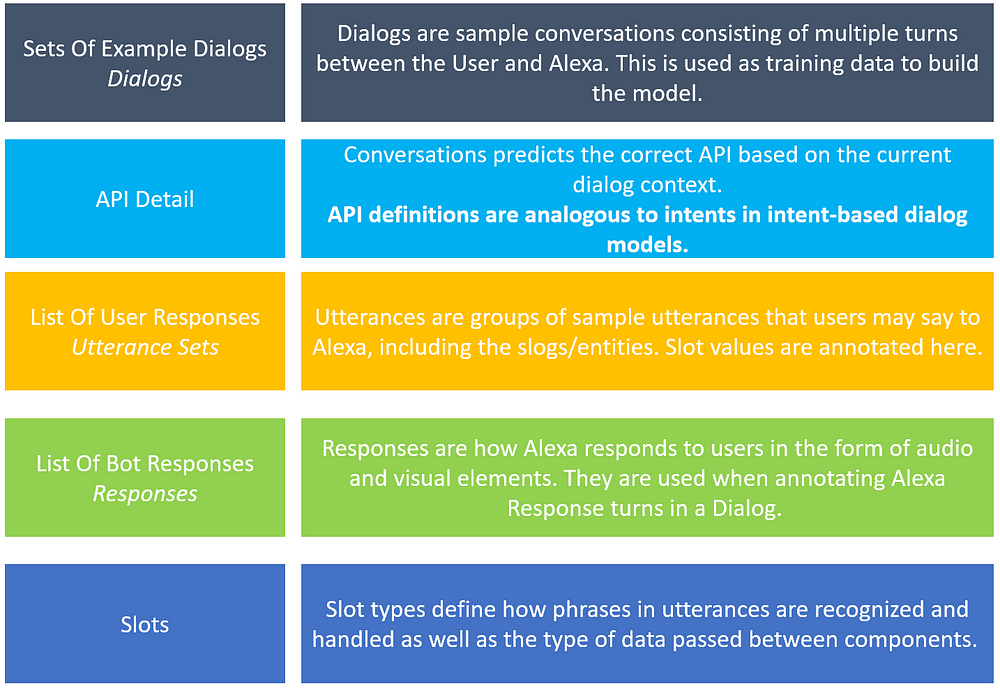

- As the user interacts with your skill, Alexa Conversations predicts the correct API based on the current dialog context. API definitions are analogous to intents in intent-based dialog models.
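Two of the constraints above (a dialog must end on an Alexa utterance, and every turn must be linked to a Dialog Act) lend themselves to a quick check before starting a long build. The sketch below is a hypothetical local validator, not part of the Alexa tooling; the `Turn` structure and the act labels `inform` and `notify` are placeholders rather than the official Dialog Act names.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str     # "user" or "alexa"
    text: str
    dialog_act: str  # placeholder label, not the official act names


def validate_dialog(turns: list) -> list:
    """Return a list of human-readable problems; an empty list means none found."""
    if not turns:
        return ["dialog is empty"]
    problems = []
    if turns[-1].speaker != "alexa":
        problems.append("dialog must end on an Alexa utterance")
    for index, turn in enumerate(turns):
        if not turn.dialog_act:
            problems.append(f"turn {index} has no dialog act")
    return problems


favorite_color = [
    Turn("user", "my favorite color is blue", "inform"),
    Turn("alexa", "Got it, blue is your favorite color.", "notify"),
]

print(validate_dialog(favorite_color))      # []
print(validate_dialog(favorite_color[:1]))  # ['dialog must end on an Alexa utterance']
```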

Architecture

Alexa Conversations (AC) rests on an architecture underpinned by five pillars. These five pillars are listed below…

Threading variables between the components entails assigning and re-assigning these variables. Errors made here are a major cause of build errors, and simplification in this department would go a long way.

Dialogs

Multiple sets of dialogs need to be defined. Each dialog is an example of a representative customer or user conversation.

Below is the AddCustomPizza dialog with the defined slots. The dialog alternates turns between the user and Alexa, and the Dialog Act for each turn is defined on the right.

The image below shows the simplest dialog, capturing a favorite color.

API Detail

When you configure a skill for Alexa Conversations, you must provide API definitions for all APIs that Alexa Conversations can call during the conversation between the user and Alexa. API definitions specify the requests that your skill code can handle.

As the user interacts with your skill, Alexa Conversations predicts the correct API based on the current dialog context. API definitions are analogous to intents in intent-based dialog models.
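Since API definitions play the role that intents play in intent-based models, one way to think about them is as a table of named requests, each with typed arguments and a handler in the skill code. The sketch below is an assumption-laden illustration in plain Python: `ApiDefinition`, `SetFavoriteColor`, and `handle_api_request` are hypothetical names, and this is not the ASK SDK's request format.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ApiDefinition:
    name: str
    arguments: dict            # argument name -> slot type name
    handler: Callable[..., dict]


def set_favorite_color(color: str) -> dict:
    # A real skill would persist the value; this sketch just echoes it back.
    return {"color": color, "saved": True}


# Hypothetical registry of the requests the skill code can handle.
API_DEFINITIONS = {
    "SetFavoriteColor": ApiDefinition(
        name="SetFavoriteColor",
        arguments={"color": "Color"},
        handler=set_favorite_color,
    ),
}


def handle_api_request(api_name: str, arguments: dict) -> dict:
    """Dispatch a predicted API call to the matching handler."""
    definition = API_DEFINITIONS[api_name]
    missing = [arg for arg in definition.arguments if arg not in arguments]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    return definition.handler(**arguments)


print(handle_api_request("SetFavoriteColor", {"color": "blue"}))
```

In this picture, the model's job is to choose `api_name` and gather `arguments` from the conversation; the skill code only has to fulfil the request.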

Utterance Sets

These are, again, groupings of example user utterances. The utterances can be annotated with entities/slots, and each utterance set also needs to be linked to one of the Dialog Acts mentioned earlier.
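As a rough mental model, an utterance set can be seen as a list of sample phrases with slot placeholders, tagged with one dialog act. The structure below is a hypothetical representation for illustration only (the `inform` label and the `{slot}` brace syntax are assumptions here, not the skill package format).

```python
import re

# Hypothetical utterance set: sample phrases annotated with slot placeholders.
UTTERANCE_SET = {
    "dialog_act": "inform",  # placeholder label
    "samples": [
        "I want a {size} pizza",
        "make it {size} with {topping}",
    ],
}


def slots_in(sample: str) -> list:
    """List the slot names annotated in a sample utterance, in order."""
    return re.findall(r"\{(\w+)\}", sample)


def render(sample: str, values: dict) -> str:
    """Fill the annotated slots to produce one concrete phrasing."""
    return sample.format(**values)


for sample in UTTERANCE_SET["samples"]:
    print(slots_in(sample), "->", render(sample, {"size": "large", "topping": "ham"}))
```

Even this toy version hints at why large implementations become cumbersome: every combination of slots a user might mention needs representative samples.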

Responses

These are the audio and visual responses based on the access device.

Slots

Slot types define how phrases in utterances are recognized and handled as well as the type of data passed between components.
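The compound slots mentioned earlier can be thought of as nested data structures rather than flat strings. The following is a minimal sketch using Python dataclasses; the `Pizza` and `Topping` types and their fields are invented for illustration and are not AC's slot type schema.

```python
from dataclasses import dataclass, field


@dataclass
class Topping:
    name: str
    amount: str = "regular"


@dataclass
class Pizza:
    # A compound slot: one value that decomposes into simpler slots.
    size: str
    crust: str
    toppings: list = field(default_factory=list)


order = Pizza("large", "thin", [Topping("ham"), Topping("cheese", "extra")])

# Decompose the compound value back into its simple parts.
flat = [(topping.name, topping.amount) for topping in order.toppings]
print(order.size, order.crust, flat)
```

Passing one structured value between components, instead of several loose variables, is the kind of simplification that could reduce the variable-threading errors described above.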

Conclusion

Alexa Conversations is a move in the right direction, replacing the dialog state machine with an AI model based on conversational data.

The conversational data components are threaded together with variables/slots that need to be populated, with a focus on collecting data, confirming the data, and feeding results back.