Is Chatbot Dialog State Machine Deprecation Inevitable?

And How Might The Best Approach Look…

Introduction

In simple terms, the idea behind a machine learning model is to receive data and return data. What should make it different is that the data input and output are not a one-to-one match.

The model should be able to assess the data received from the user and in turn respond with the data which best matches the user input.

Hence there is a process of assessment, and a list of best fits is created, each with its own confidence score.
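As a rough illustration of this assessment step, the Python sketch below ranks a handful of intents against a user utterance and attaches a confidence score to each. It is a toy, not how any particular NLU engine works: the intent names and example phrases are hypothetical, the raw score is plain word overlap (Jaccard similarity) instead of a trained model, and a softmax with an arbitrary `scale` factor turns the raw scores into confidences that sum to one.

```python
import math
import re

# Hypothetical intents, each with a few example phrases.
EXAMPLES = {
    "greet": ["hello there", "hi", "good morning"],
    "buy_dog": ["i want to buy a dog", "i am thinking of buying a puppy"],
    "opening_hours": ["when are you open", "what are your opening hours"],
}


def tokens(text):
    """Lower-cased word tokens of a text."""
    return set(re.findall(r"[a-z']+", text.lower()))


def rank_intents(utterance, scale=5.0):
    """Return (intent, confidence) pairs, best fit first; confidences sum to 1."""
    words = tokens(utterance)
    # Raw score: the best Jaccard overlap with any example phrase of the intent.
    raw = {
        intent: max(len(words & tokens(ex)) / len(words | tokens(ex)) for ex in examples)
        for intent, examples in EXAMPLES.items()
    }
    # Softmax: exponentiate the scaled scores, then normalize them.
    exps = {intent: math.exp(scale * score) for intent, score in raw.items()}
    total = sum(exps.values())
    ranked = [(intent, value / total) for intent, value in exps.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)
```

Calling `rank_intents("I am thinking of buying a dog")` puts `buy_dog` first, with the other intents trailing at lower confidence. The point is the shape of the output: a ranked list of candidates, not a single hard answer.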

The ideal for a Conversational AI implementation is to have this level of flexibility throughout the different layers of the technology stack.

In such an implementation, the user gives input to the chatbot and the chatbot returns a best-fit response, with each dialog turn based on the most probable next step in the conversation, while context and continuity are maintained throughout.

The three chatbot architecture elements which need to be deprecated at some stage are state machine dialog management, intents and bot responses.

A chatbot freed from these constraints is often referred to as a level 4 or 5 conversational agent, offering unrestricted, compound natural language interactions, obviously within the implementation domain of the chatbot.

The current chatbot status quo sits between keyword recognition and structured intent and entity matching.

But what is a level 4 & 5 chatbot?

General Observations on Level 4 & 5 Chatbots

Rasa describes a level 4 chatbot as follows:

A Level 4 assistant will know you much more in detail. It doesn’t need to ask every detail, and instead quickly checks a few final things before giving you a quote tailored to your actual situation.

And, beyond a highly personalized experience, we want to introduce a chatbot with which the user can have a conversation that is:

- completely natural

- unstructured

- dynamic.

In practice, to have a true Level 4 or 5 chatbot or conversational agent, the layers of constraint and rigidity need to be removed. In other words, the rigid layers which introduce this strait-laced approach require deprecation.

One can say that traditional chatbots, or conversational AI agents, are built on a four-pillar architecture.

This architecture is close to universal across commercial chatbot platforms. The four pillars are:

- Intents

- Entities

- Bot Responses (aka Script / Bot Dialog)

- Dialog State Machine Management

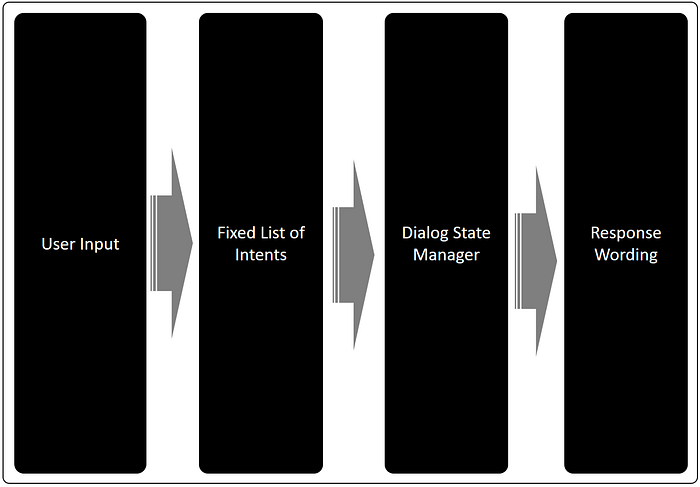

The Four Pillars Of Traditional Chatbot Architecture. For a level 4/5 chatbot, the rigidity in Intents, Bot Responses and Dialog State Management needs to be removed, as also shown in the image below…

Allow me to explain…

As seen here, there are two components: the NLU component and the Dialog Management component. In the case of Microsoft, Rasa, Amazon Lex, Oracle etc., the distinction and separation between these two is clear and pronounced. In IBM Watson Assistant, it is not.

The NLU component is constituted by intents and entities. And the Dialog component by bot responses and the state machine.

The three impediments to chatbots becoming true AI agents are intents, state machines and bot responses.

The three areas of rigidity are indicated by the arrows, as discussed here.

- For example, a user utterance is mapped to a single predefined intent.

- In turn, a single intent is assigned to an entry point within a rigid state machine for the bot to respond.

- The state machine has a hardcoded response to the user for each conversational dialog node. Sometimes the return wording has variables embedded to give some element of a tailored response.

Intents can be seen as verbs (what the user intends to do) and entities as nouns (cities, dates, names etc.).

So it is clear that, with these three levels of rigidity, progress to levels 4 and 5 is severely impeded.

The ideal scenario is where the user input is directly matched to a machine learning story which can learn and adapt from user conversations.

This would break down the rigidity of the current architecture, where machine learning exists only in matching user input to intents and entities.

1. Conversation State Management

The NLU model is a machine learning model: it interprets the user utterance and assigns intents and entities to it, even when it was not trained on that specific utterance.

The user utterance is assigned to an intent. In turn, the intent is linked to a particular point in the state machine.

This is not the case with the Conversation State Manager, also referred to as the dialog flow system.

In most cases it is a decision-tree approach, where intents, entities and other conditions are evaluated in order to determine the next dialog state.

The user conversation is dictated by this rigid and pre-determined flow with conditions and logic activating a dialog node.
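A minimal sketch of such a decision-tree dialog manager, assuming a hypothetical restaurant-booking flow: each state holds an ordered list of hand-written conditions over the intent, the entities and previously filled slots, and the first condition that holds selects the next state. The state names and rules are illustrative only, not taken from any specific platform.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    """One parsed user turn: an intent plus any extracted entities."""
    intent: str
    entities: dict = field(default_factory=dict)


# Hypothetical flow: each state maps to ordered (condition, next_state) rules.
FLOW = {
    "start": [
        (lambda turn, slots: turn.intent == "greet", "ask_how_can_help"),
    ],
    "ask_how_can_help": [
        (lambda turn, slots: turn.intent == "inform" and "cuisine" not in slots, "ask_cuisine"),
        (lambda turn, slots: turn.intent == "inform", "ask_num_people"),
    ],
    "ask_cuisine": [
        (lambda turn, slots: "cuisine" in slots, "ask_num_people"),
    ],
}


def next_state(state, turn, slots):
    """Store the turn's entities as slots, then apply the first matching rule."""
    slots.update(turn.entities)
    for condition, target in FLOW.get(state, []):
        if condition(turn, slots):
            return target
    # No rule fired: stay in the current state, since the bot cannot improvise.
    return state
```

Every path has to be anticipated by the developer; an input that no rule covers simply leaves the conversation where it was.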

Here Rasa stands alone, with an approach it invented and pioneered: it applies machine learning, and the framework calculates the most probable next conversational node based on user stories.

```yaml
stories:
- story: collect restaurant booking info  # name of the story - just for debugging
  steps:
  - intent: greet                         # user message with no entities
  - action: utter_ask_howcanhelp
  - intent: inform                        # user message with entities
    entities:
    - location: "rome"
    - price: "cheap"
  - action: utter_on_it                   # action that the bot should execute
  - action: utter_ask_cuisine
  - intent: inform
    entities:
    - cuisine: "spanish"
  - action: utter_ask_num_people
```

Stories example from: https://rasa.com/docs/rasa/stories 👆

Rasa’s approach seems quite counterintuitive… Instead of defining conditions and rules for each node, the chatbot is presented with real conversations. The chatbot then learns from these conversational sequences to manage future conversations.

These different conversations, referred to as Rasa Stories, are the training data employed for creating the dialog management models.
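To show the idea of learning the next step from example conversations rather than from hand-written rules, here is a toy Python sketch. It is not Rasa’s implementation (Rasa ships its own dialog policies, such as a memoization policy and the transformer-based TED policy); it simply counts which bot action followed the last two events in each training story. The first story flattens the restaurant-booking example above into intent and action names, dropping entities, and the second story is hypothetical.

```python
from collections import Counter, defaultdict

STORIES = [
    # The restaurant-booking story, flattened to intent and action names.
    ["greet", "utter_ask_howcanhelp", "inform", "utter_on_it",
     "utter_ask_cuisine", "inform", "utter_ask_num_people"],
    # A second, hypothetical story.
    ["greet", "utter_ask_howcanhelp", "goodbye", "utter_goodbye"],
]


def train(stories, history=2):
    """Count which bot action follows each window of up to `history` events."""
    model = defaultdict(Counter)
    for story in stories:
        for i, event in enumerate(story):
            if event.startswith("utter_"):  # only bot actions are predicted
                context = tuple(story[max(0, i - history):i])
                model[context][event] += 1
    return model


def predict(model, events, history=2):
    """Return the most frequent next action for the recent context, or None."""
    counts = model.get(tuple(events[-history:]))
    return counts.most_common(1)[0][0] if counts else None
```

With `model = train(STORIES)`, the call `predict(model, ["greet", "utter_ask_howcanhelp", "goodbye"])` returns `"utter_goodbye"`. Because this toy only memorizes contexts it has seen, it returns `None` for anything new; a machine learning policy is meant to generalize beyond the exact stories it was trained on.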

2. Intents

Intent deprecation has been introduced by Rasa, IBM, Microsoft and Alexa, even if only in an experimental and limited capacity.

The reason behind this is that a finite list of intents is usually defined.

Subsequently, every single user request needs to be mapped or matched to a single pre-defined intent. Segmenting the chatbot’s domain of concern into different intents is an arduous task.

At the same time, one must ensure that there are no overlaps or gaps between the defined intents. But what if we could go directly from user utterance to meaning? To the best matching dialog for the user utterance?

Traditionally, each and every conceivable user input needs to be assigned to a particular intent. During transcript review, if user input does not neatly match an existing intent, a new intent needs to be invented.

This strait-laced layer of categorizing each user utterance by intent is rigid and inflexible: a fixed set of categories ends up managing the conversation.

Hence, within a chatbot the first line of conversation facilitation is intent recognition.

And herein lies the challenge: in most chatbot platforms, a machine learning model of sorts is used to assign a user utterance to a specific intent.

Intents are also a rigid layer within a chatbot. Any conceivable user input needs to be anticipated and mapped to a single intent.

And from here the intent is tied to a specific point in the state machine (aka dialog tree). As you can see from the sequence below, the user input “I am thinking of buying a dog.” is matched to the intent Buy Dog. And from here the intents are hardcoded to dialog entry points.


Again, the list of intents is rigid and fixed. Subsequently each intent is linked to a portion of the pre-defined dialog.

User input is matched to one intent. The identified intent is part of a fixed list of intents. In turn, each intent is assigned to a portion of the dialog.


But, what if the layer of intents can be deprecated and user input can be mapped directly to the dialog?

This development is crucial in order to move from a messaging bot to a conversational AI interface.

This layer of intents is also a layer of translation which muddies the conversational waters.

Making intents optional, and running the two approaches in parallel, allows a conversation to either use or bypass intents.
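A minimal sketch of running both approaches in parallel, assuming hypothetical intents and a hypothetical set of intent-free (user text, bot reply) pairs. Word overlap again stands in for a trained model: if the best intent clears a confidence threshold its response is used, otherwise the utterance is mapped straight to the closest example dialog, bypassing intents entirely.

```python
import re


def words(text):
    """Lower-cased word set of a text."""
    return set(re.findall(r"[a-z']+", text.lower()))


def similarity(a, b):
    """Jaccard overlap between two texts (a stand-in for a learned model)."""
    wa, wb = words(a), words(b)
    return len(wa & wb) / len(wa | wb) if wa | wb else 0.0


# Path 1: hypothetical intents, each with examples and one tied response.
INTENTS = {
    "buy_dog": (["i want to buy a dog"], "Which breed are you interested in?"),
    "opening_hours": (["what are your opening hours"], "We open at 9:00."),
}

# Path 2: hypothetical (user text, bot reply) pairs with no intent label at all.
DIALOG_PAIRS = [
    ("my dog keeps barking at night", "That sounds tiring. How old is your dog?"),
    ("can i bring my dog inside", "Yes, dogs on a leash are welcome."),
]


def reply(utterance, threshold=0.5):
    """Use the intent path when it is confident, otherwise match dialog directly."""
    intent, score = max(
        ((name, max(similarity(utterance, ex) for ex in examples))
         for name, (examples, _) in INTENTS.items()),
        key=lambda pair: pair[1],
    )
    if score >= threshold:
        return INTENTS[intent][1]
    # Bypass intents: answer with the reply of the most similar example dialog.
    _, best_reply = max(DIALOG_PAIRS, key=lambda pair: similarity(utterance, pair[0]))
    return best_reply
```

`reply("I want to buy a dog")` takes the intent path, whereas `reply("Can I bring my dog inside the shop?")` matches no intent well enough and is answered from the dialog pairs. The threshold of 0.5 is arbitrary.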

3. Chatbot Text or Return Dialog (NLG)

Natural Language Generation (NLG) is the conversion of structured data into unstructured, conversational text.

The script or dialog the chatbot returns and presents to the user is also well defined and rigid.

A restaurant review is created from a few keywords and the restaurant name.

The wording returned by the chatbot is linked one-to-one to a specific dialog state node, with each dialog node having a set response.
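For contrast with free-form generation, the sketch below is template-based NLG: it turns structured data (a restaurant name and a few keywords) into a sentence, but the wording can only vary within templates written by hand. The function name and templates are hypothetical.

```python
import random


def describe_restaurant(name, keywords, rng=random):
    """Template-based NLG: render a restaurant name and keywords as one sentence."""
    if not keywords:
        raise ValueError("at least one keyword is required")
    # Join keywords naturally: "a", "a and b", "a, b and c".
    if len(keywords) == 1:
        kw = keywords[0]
    else:
        kw = ", ".join(keywords[:-1]) + " and " + keywords[-1]
    # The only variation possible is picking between hand-written templates.
    templates = [
        "{name} is known for {kw}.",
        "If you like {kw}, {name} is worth a visit.",
    ]
    return rng.choice(templates).format(name=name, kw=kw)
```

For example, `describe_restaurant("Luigi's", ["fresh pasta", "friendly staff"])` returns one of two fixed phrasings. Adding tone or nuance means writing more templates, which is exactly the rigidity described above.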

GPT-3 is seeking to change this, and it has deprecated all three of these elements.

There is no need for defined intents or entities, and dialog state management and conversation context just happen.

Lastly, the fixed return dialog or wording is replaced by real-time Natural Language Generation (NLG).
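The sketch below shows why dialog state management "just happens" with a model like GPT-3: there is no explicit state machine, and context is carried by feeding the conversation so far back to the model as text. The prompt format is an assumption, and `generate` is a placeholder for whatever text-generation call is used; no real API is shown.

```python
from typing import Callable, List, Tuple


def build_prompt(history: List[Tuple[str, str]], user_input: str) -> str:
    """Flatten the whole conversation into one text prompt (format is an assumption)."""
    lines = ["The following is a conversation with a helpful assistant."]
    for speaker, text in history:
        lines.append(f"{speaker}: {text}")
    lines.append(f"User: {user_input}")
    lines.append("Assistant:")  # the model is expected to continue from here
    return "\n".join(lines)


def chat_turn(history: List[Tuple[str, str]], user_input: str,
              generate: Callable[[str], str]) -> str:
    """One turn: build the prompt, generate a reply, append both to the history."""
    reply = generate(build_prompt(history, user_input)).strip()
    history.append(("User", user_input))
    history.append(("Assistant", reply))
    return reply
```

The only "state" is the growing `history` list; whatever the model produces becomes the response, which is also why unexpected output can reach the user unfiltered.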

Whilst GPT-3 has made this leap, which makes for an amazing demo and prototype, there are some pitfalls.

Some pitfalls are:

- A large amount of training data is required in some cases.

- Aberrations in NLG can result in a bad user experience.

- Tone, bot personality and quality responses might be lost.

Conclusion

GPT-3 has indeed deprecated all three of these elements: intents, dialog state management and the bot response text/dialog.

But this comes at the cost of fine-tuning, forms and policies, and of being able to work with a small amount of training data.

A balance needs to be found, where the complexity under the hood is surfaced via a simple and effective development interface, without trapping users in a low-code-only environment with no option to fine-tune and tweak the framework.