Mitigating Hallucination

Search engines & applications are attached to data sources. Even though Large Language Models (LLMs) hold vast amounts of knowledge, they lack specific contextual information. So why not attach them to a data source for contextual reference?

I’m currently the Chief Evangelist @ HumanFirst. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.

Four years ago I wrote about the need for chatbots to be contextually aware. We are back at that same point now with LLMs where the importance of contextual reference data needs to be emphasised.

LLMs are trained on a vast corpus of data, but there are two problems with such a huge, time-stamped model:

- The model is frozen in time, and any subsequent information or developments are excluded.

- Every conversation or question has a specific context which needs to be referenced in the conversation.

LLM hallucination is when the LLM returns a highly plausible and coherent answer that is factually incorrect. The training data is not necessarily contaminated or incorrect; in most cases the problem is a lack of context.

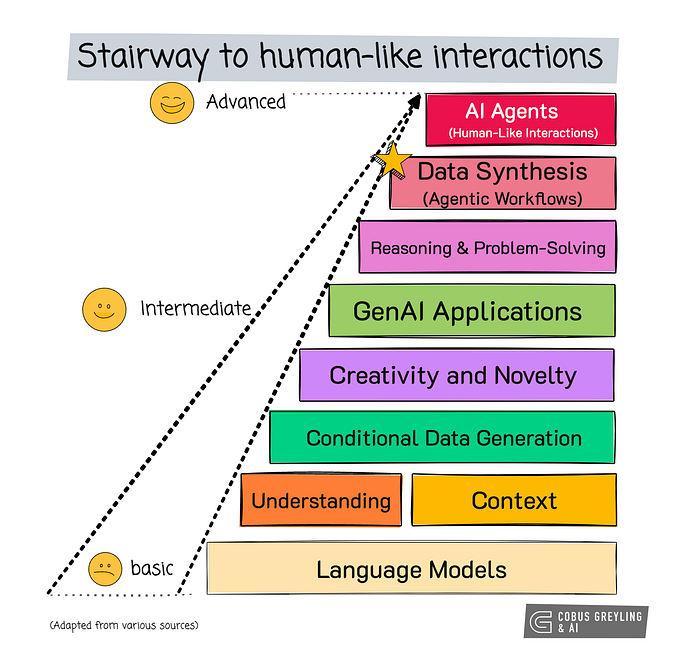

As shown in the header image, LLMs have many characteristics which make them an indispensable layer in a conversational UI stack. All of these are complemented by a contextual reference.

Consider the contextual reference below, which is a single chunk of text taken from a larger document hosted by HuggingFace.

The Statue of Zeus at Olympia was a giant seated figure, about 12.4 m (41 ft) tall,[1] made by the Greek sculptor Phidias around 435 BC at the sanctuary of Olympia, Greece, and erected in the Temple of Zeus there. Zeus is the sky and thunder god in ancient Greek religion, who rules as king of the gods of Mount Olympus. The statue was a chryselephantine sculpture of ivory plates and gold panels on a wooden framework. Zeus sat on a painted cedarwood throne ornamented with ebony, ivory, gold, and precious stones. It was one of the Seven Wonders of the Ancient World. The statue was lost and destroyed before the end of the 5th century AD, with conflicting accounts of the date and circumstances. Details of its form are known only from ancient Greek descriptions and representations on coins. Coin from Elis district in southern Greece illustrating the Olympian Zeus statue (Nordisk familjebok) History[edit] The statue of Zeus was commissioned by the Eleans, custodians of the Olympic Games, in the latter half of the fifth century BC for their newly constructed Temple of Zeus.

When the question "What was made of chryselephantine?" is asked together with an instruction and the supplied context, as seen below, a correct and comprehensive answer is given:

Instruction: answer the question below by referencing the context supplied.

Question: What was made of chryselephantine?

Context: The Statue of Zeus at Olympia was a giant seated figure, about 12.4 m (41 ft) tall,[1] made by the Greek sculptor Phidias around 435 BC at the sanctuary of Olympia, Greece, and erected in the Temple of Zeus there. Zeus is the sky and thunder god in ancient Greek religion, who rules as king of the gods of Mount Olympus. The statue was a chryselephantine sculpture of ivory plates and gold panels on a wooden framework. Zeus sat on a painted cedarwood throne ornamented with ebony, ivory, gold, and precious stones. It was one of the Seven Wonders of the Ancient World. The statue was lost and destroyed before the end of the 5th century AD, with conflicting accounts of the date and circumstances. Details of its form are known only from ancient Greek descriptions and representations on coins. Coin from Elis district in southern Greece illustrating the Olympian Zeus statue (Nordisk familjebok) History[edit] The statue of Zeus was commissioned by the Eleans, custodians of the Olympic Games, in the latter half of the fifth century BC for their newly constructed Temple of Zeus.

Answer:

In the two examples below, the model google/flan-t5-xxl, hosted by HuggingFace, is used in both instances, on the left and on the right.

On the left, no RAG (retrieval-augmented generation) or contextual reference approach is taken. On the right, however, the prompt above is supplied and the LLM gives a correct answer.
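The prompt layout shown earlier (instruction, question, context, and an empty answer slot) can be assembled programmatically. What follows is only a minimal sketch in Python: the function name `build_prompt` and the constant `INSTRUCTION` are hypothetical names chosen for illustration, and the context is shortened to a single sentence from the chunk. It builds both variants, the bare question and the context-grounded prompt, without calling any model.

```python
from typing import Optional

# Hypothetical constant; the wording is taken from the instruction used above.
INSTRUCTION = "answer the question below by referencing the context supplied."


def build_prompt(question: str, context: Optional[str] = None) -> str:
    """Return a bare prompt, or a context-grounded one when context is given."""
    if context is None:
        # No contextual reference: the model can only rely on its training data.
        return f"Question: {question}\n\nAnswer:"
    # Contextual reference supplied: instruction, question, context, answer slot.
    return (
        f"Instruction: {INSTRUCTION}\n\n"
        f"Question: {question}\n\n"
        f"Context: {context.strip()}\n\n"
        "Answer:"
    )


question = "What was made of chryselephantine?"
# One sentence from the chunk, shortened here for readability.
context = (
    "The statue was a chryselephantine sculpture of ivory plates and gold "
    "panels on a wooden framework."
)

plain_prompt = build_prompt(question)          # the no-context case
rag_prompt = build_prompt(question, context)   # the contextual-reference case
print(rag_prompt)
```

Either string can then be sent to whichever model is being compared; the only difference between the two runs is the presence of the context.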

Below, the same question is posed to gpt-3.5-turbo; a comprehensive answer is given sans any contextual reference or RAG approach.

This does not necessarily mean that the GPT-3.5 model will never hallucinate, but it does suggest that the OpenAI models are better at fielding questions without any contextual reference.

Lastly, another advantage of RAG is that a generative application can become LLM agnostic: LLMs become a utility, Gen Apps can pivot to more cost-efficient LLMs, and any resulting loss of performance can be offset via RAG.
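One way to picture this LLM-agnostic design is to treat the model as nothing more than a function from prompt to text, and keep retrieval and prompt construction outside of it. The sketch below is an illustration under stated assumptions, not a production design: `retrieve` is a toy word-overlap retriever, and `backend_a` and `backend_b` are hypothetical stand-ins for real model clients. All names are invented for this example.

```python
import re
from typing import Callable, List, Set

# Assumption: any function mapping a prompt string to a completion string
# can serve as the LLM, which is what makes the application model-agnostic.
LLM = Callable[[str], str]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, chunks: List[str]) -> str:
    """Toy retriever: pick the chunk sharing the most words with the question."""
    q_words = tokenize(question)
    return max(chunks, key=lambda chunk: len(q_words & tokenize(chunk)))


def answer(question: str, chunks: List[str], llm: LLM) -> str:
    """Build a context-grounded prompt and hand it to whichever LLM is plugged in."""
    context = retrieve(question, chunks)
    prompt = (
        "Instruction: answer the question below by referencing the context supplied.\n\n"
        f"Question: {question}\n\n"
        f"Context: {context}\n\n"
        "Answer:"
    )
    return llm(prompt)


# Hypothetical stand-ins for two different model backends.
def backend_a(prompt: str) -> str:
    return f"backend-a received {len(prompt)} characters"


def backend_b(prompt: str) -> str:
    return f"backend-b received {len(prompt)} characters"


chunks = [
    "The statue was a chryselephantine sculpture of ivory plates and gold panels.",
    "Zeus is the sky and thunder god in ancient Greek religion.",
]
question = "What was made of chryselephantine?"

# Swapping the model is a one-argument change; retrieval and prompt stay the same.
print(answer(question, chunks, backend_a))
print(answer(question, chunks, backend_b))
```

In a real application the stand-ins would wrap actual model calls and the retriever would typically use embeddings rather than word overlap, but the separation of concerns would be the same.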
