Why The Focus Has Shifted from AI Agents to Agentic Workflows

We find ourselves on a stairway that leads from the introduction of Large Language Models to AI Agents with human-like digital interactions. But…

…there has been a shift in commercial implementations, with the focus moving from AI Agents to Agentic Workflows and data synthesis.

Why is the focus moving away from AI Agents (for now)?

Companies like Salesforce and Service made hard pivots to AI Agents. However, the stark reality is that the technology is not where it should be in terms of accuracy.

If one looks past the marketing hype and the impressive prototypes and demos, the accuracy of AI Agents is not yet suited for production.

The Claude AI Agent Computer Interface (ACI) performance sits at 14% of human performance.

The graph below from TheAgentFactory is an indication of where AI Agents sit in terms of cost, steps and success rate. Notice how the success rate is around 20%.

These figures are the stark reality of the current situation.

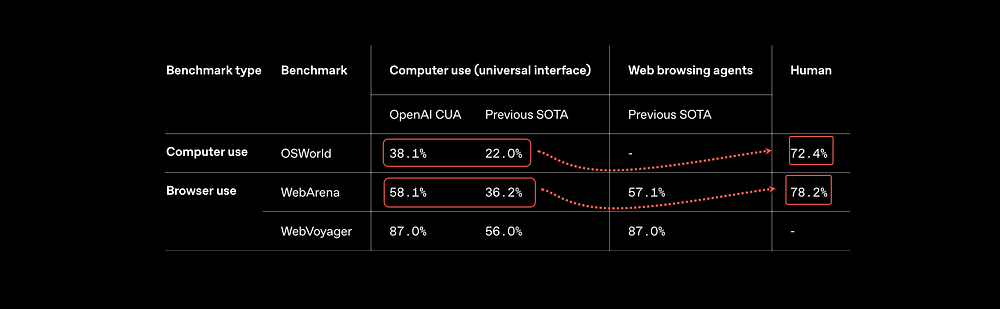

With the recent release of the OpenAI Operator, accuracy of 30 to 50% was reached for computer use and web browser use, but this still lags the 70%+ of human capability.

Added to this, there are interesting studies on how AI Agents with web browsing are susceptible to, and easy prey for, attacks via nefarious pop-ups.

There are two avenues for AI Agents to perform tasks like humans: the first is via a web browser (WebVoyager, OpenAI Operator, etc.). The second is via the complete GUI of the OS (Anthropic).

These approaches make use of the GUI as the API for the AI Agents.

Initial approaches looked at using individual APIs, but this is not practical due to the overhead of developing each and every API integration. Also, for many commercial applications there exists no API.

Why the focus on Agentic Workflows

There is broad agreement that modern knowledge work is broken, with various numbers being cited. One report stated that workers spend 30% of their time searching for information.

Knowledge workers also face the challenge of answering complex questions that require synthesising information from various documents.

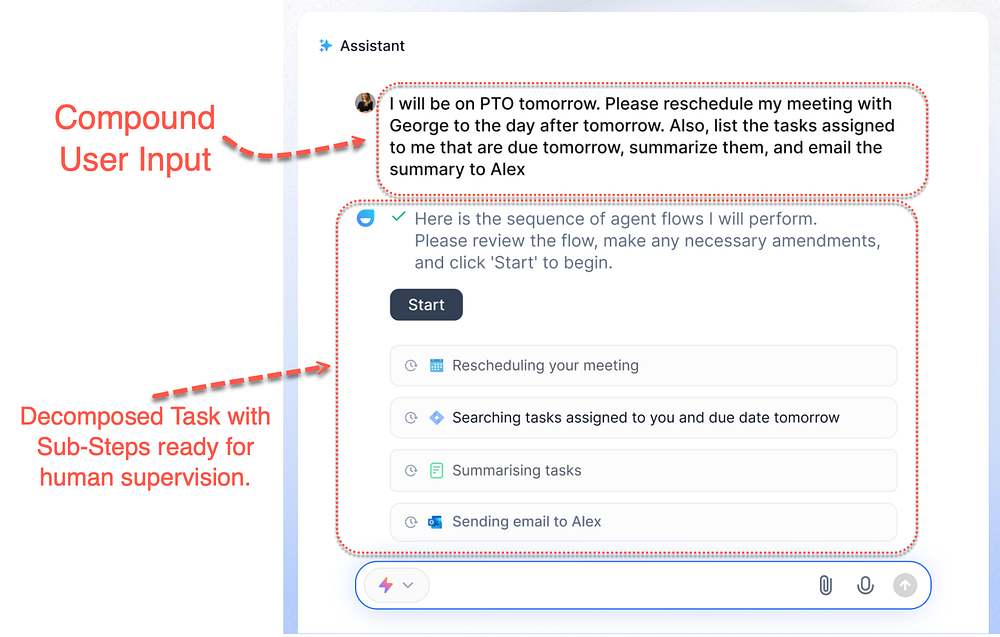

Agentic Workflows (as shown in the image below) allow for reasoning, decomposing complex tasks into simpler sub-tasks and chaining these sub-tasks together in a sequence.

By executing this sequence, elements like observability, inspectability and discoverability are introduced.
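To make the idea of chaining sub-tasks a little more concrete, here is a minimal sketch of a workflow that runs steps in sequence and records a trace of every hop. It is only an illustration: the `Step` and `Workflow` classes and the three step functions are hypothetical names, and the functions are plain string stand-ins for what would be model or tool calls in a real system.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Step:
    name: str
    run: Callable[[str], str]  # receives the previous step's output


@dataclass
class Workflow:
    steps: List[Step]
    trace: List[dict] = field(default_factory=list)

    def execute(self, task: str) -> str:
        data = task
        for step in self.steps:
            result = step.run(data)
            # Record every hop so the run can be inspected afterwards.
            self.trace.append(
                {"step": step.name, "input": data, "output": result}
            )
            data = result
        return data


# Hypothetical stand-ins for model or tool calls (assumptions, not a real API).
def find_documents(question: str) -> str:
    return f"documents relevant to: {question}"


def extract_facts(documents: str) -> str:
    return f"facts extracted from [{documents}]"


def write_answer(facts: str) -> str:
    return f"answer based on [{facts}]"


workflow = Workflow(steps=[
    Step("search", find_documents),
    Step("extract", extract_facts),
    Step("synthesise", write_answer),
])

answer = workflow.execute("What changed in the travel policy?")
for entry in workflow.trace:
    print(entry["step"], "->", entry["output"])
```

Because each step's input and output is stored in `trace`, a failed or surprising answer can be followed back to the sub-task that produced it, which is roughly what observability and inspectability mean here.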

The synthesis of data will become increasingly important. Agentic Workflows for knowledge workers are one such example, where work data and resources can be synthesised for the worker into one answer.

Language Model providers are moving away from only offering the model, and are extending into User Experience. Deep Research in ChatGPT is not a new model, but rather a new agentic capability within ChatGPT which conducts multi-step research on the internet for complex tasks. It accomplishes in tens of minutes what would take a human many hours.

This is also a good example of how disparate sources of data are synthesised to answer a user question.

I feel the idea of Agentic RAG is something LlamaIndex coined, where the notion is that synthesising data for an “audience of one” at a particular point in time will become important.

In the coming months there will be immense focus on personal agentic workflows and information synthesis, something you could call desktop orchestration.

Reasoning & Problem-Solving

Modern AI models are increasingly integrating reasoning as a core feature, enabling them to tackle complex problems by breaking them down into manageable components.

This shift is underpinned by an innovative approach that involves decomposing questions into smaller subsets, allowing the model to address each part systematically.

By treating reasoning as an internal mechanism, these models can simulate human-like thought processes, enhancing their ability to provide accurate and nuanced responses.

The decomposition strategy not only improves problem-solving efficiency but also fosters greater transparency in how conclusions are reached.

As a result, users benefit from more interpretable outputs, bridging the gap between advanced computation and understandable decision-making.

Initially, users had to include reasoning traits in their prompt, instructing the model how to reason and decompose complex or compound tasks, and giving examples via a few-shot approach for the model to emulate.
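As a rough illustration of that earlier, prompt-driven approach, the sketch below assembles a few-shot prompt that tells the model to decompose a question and shows one worked example to emulate. The function name `build_few_shot_prompt`, the instruction wording and the example are all assumptions made for illustration; the resulting string could be sent to any model.

```python
from typing import List, Tuple

# Instruction that spells out the reasoning behaviour we want (assumed wording).
INSTRUCTION = (
    "Break the question into smaller sub-questions, answer each one, "
    "then combine the results into a final answer."
)


def build_few_shot_prompt(examples: List[Tuple[str, str]], question: str) -> str:
    """Join the instruction, worked examples and the new question into one prompt."""
    parts = [INSTRUCTION, ""]
    for example_question, worked_reasoning in examples:
        parts.append(f"Question: {example_question}")
        parts.append(f"Reasoning: {worked_reasoning}")
        parts.append("")
    # Leave the final reasoning slot open for the model to complete.
    parts.append(f"Question: {question}")
    parts.append("Reasoning:")
    return "\n".join(parts)


examples = [
    (
        "A team of 4 each files 3 reports a week. How many reports in 2 weeks?",
        "Reports per week: 4 * 3 = 12. Over 2 weeks: 12 * 2 = 24. Final answer: 24.",
    ),
]

prompt = build_few_shot_prompt(
    examples,
    "A shop sells 5 boxes a day with 6 items each. How many items in 3 days?",
)
print(prompt)
```

With reasoning now built into many models, this scaffolding is often no longer needed, but it shows what users previously had to supply by hand.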

In Closing

Organisations must stop fixating on specific tools or trends, as those that once branded themselves as RAG companies or Prompt Engineering playgrounds did, and instead prioritise solving real-world business challenges.

The world is moving forward at an unprecedented pace, with new technologies emerging almost daily, each promising to revolutionise industries.

But the true measure of innovation lies not in mastering the latest technology but in applying these advancements to create tangible value.

Whether it’s improving customer experiences, streamlining operations, or addressing societal needs, the question remains: how can we leverage technology to deliver meaningful solutions?

By adopting this mindset, businesses can future-proof themselves and ensure they remain relevant amid ever-changing tides of progress.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.