Prompt Pipelines

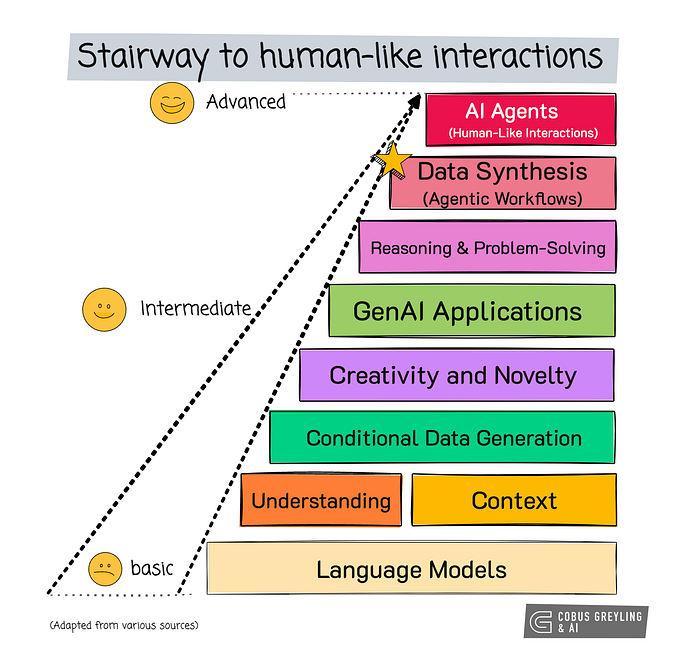

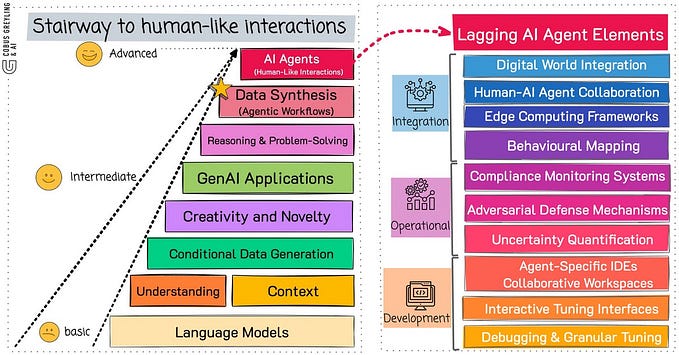

LLM-based applications can take the form of autonomous agents, prompt chaining or prompt pipelines. These approaches make use of one or more underlying prompt templates.

Introduction

In the case of autonomous agents and prompt chaining, there is usually interaction between the user and the generative AI application. The user and the application therefore hold a dialog of sorts that spans a number of dialog turns.

A prompt pipeline, by contrast, is generally a sequence of stages or events that is kicked off by a predetermined event or API call.
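To make the contrast with multi-turn dialog concrete, here is a minimal sketch in plain Python with no LLM call. It assumes a pipeline is simply an ordered list of stage functions registered against a predetermined event; the names `PIPELINES`, `on_event`, `normalize_text` and `build_prompt` are hypothetical and not part of any framework.

```python
from typing import Callable, Dict, List

# A stage takes the current payload (a dict) and returns an updated payload.
Stage = Callable[[dict], dict]


def normalize_text(payload: dict) -> dict:
    # Hypothetical stage: collapse repeated whitespace in the raw input.
    return {**payload, "text": " ".join(payload["text"].split())}


def build_prompt(payload: dict) -> dict:
    # Hypothetical stage: wrap the cleaned text in a fixed prompt template.
    prompt = f"Summarize the following support ticket:\n{payload['text']}"
    return {**payload, "prompt": prompt}


# Hypothetical registry: each predetermined event maps to an ordered list of stages.
PIPELINES: Dict[str, List[Stage]] = {
    "ticket_created": [normalize_text, build_prompt],
}


def on_event(event: str, payload: dict) -> dict:
    """Run every stage registered for `event`, in order, exactly once.

    There are no dialog turns: the trigger fires, the stages run, and the
    final payload (which would then be sent to an LLM) is returned.
    """
    for stage in PIPELINES[event]:
        payload = stage(payload)
    return payload


result = on_event("ticket_created", {"text": "  Printer   on floor 3 is jammed "})
print(result["prompt"])
```

Because each stage is a plain function, the same stage can be reused across several pipelines.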

As the images above illustrate, the idea behind a prompt pipeline GUI is to provide a user-friendly interface for creating a sequence of events. Constructing prompt pipelines this way makes components easy to reuse.

Simple LangChain Example

Prompt pipelines extend prompt templates by automatically injecting contextual reference data into each prompt.
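A minimal sketch of that injection step, without LangChain: the template stays fixed, while the pipeline looks up reference data for each request and fills it in before the prompt is sent to a model. The in-memory `REFERENCE_DATA` store and the `inject_context` helper are hypothetical stand-ins for whatever data source a real pipeline would query.

```python
# Fixed prompt template with a slot for injected reference data.
TEMPLATE = (
    "Answer the question using only the context below.\n"
    "Context: {context}\n"
    "Question: {question}"
)

# Hypothetical reference store, keyed by topic (illustrative data only).
REFERENCE_DATA = {
    "returns": "Items can be returned within 30 days with a receipt.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
}


def inject_context(question: str, topic: str) -> str:
    # Look up the reference data for this request and inject it into the template.
    context = REFERENCE_DATA.get(topic, "No reference data available.")
    return TEMPLATE.format(context=context, question=question)


print(inject_context("How long do I have to return a jacket?", "returns"))
```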

The Python code below is a simple application illustrating how a prompt pipeline can be built using LangChain.

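```shell
pip install langchain
pip install openai
```

```python
import os

from langchain.prompts import PromptTemplate

# Replace the placeholder with your own OpenAI API key.
os.environ["OPENAI_API_KEY"] = "your-openai-key-goes-here"

# Start from a template string, then append more text with "+".
# Both {topic} and {language} become input variables of the combined template.
prompt = (
    PromptTemplate.from_template("Tell me a joke about {topic}")
    + ", make it funny"
    + "\n\nand in {language}"
)

prompt
```

In a notebook, evaluating `prompt` displays the combined template:

```
PromptTemplate(input_variables=['language', 'topic'], output_parser=None, partial_variables={}, template='Tell me a joke about {topic}, make it funny\n\nand in {language}', template_format='f-string', validate_template=True)
```

```python
# Render the template with concrete values to inspect the final prompt text.
prompt.format(topic="sports", language="Afrikaans")
# 'Tell me a joke about sports, make it funny\n\nand in Afrikaans'

# These imports follow the older LangChain API used in this example. Newer
# releases move ChatOpenAI to the langchain-openai package and replace
# LLMChain/.run() with (prompt | model).invoke(...).
from langchain.chains import LLMChain
from langchain.chat_models import ChatOpenAI

model = ChatOpenAI()  # reads OPENAI_API_KEY from the environment

# Wrap the model and the prompt template in a chain, then run it.
chain = LLMChain(llm=model, prompt=prompt)

chain.run(topic="sports", language="Afrikaans")
```

With the output below: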

```
Hoe noem jy 'n skaap wat rugby speel?

'N "Baaa-ll" speler!
```

In English, roughly: "What do you call a sheep that plays rugby? A 'Baaa-ll' player!"

No-Code Example

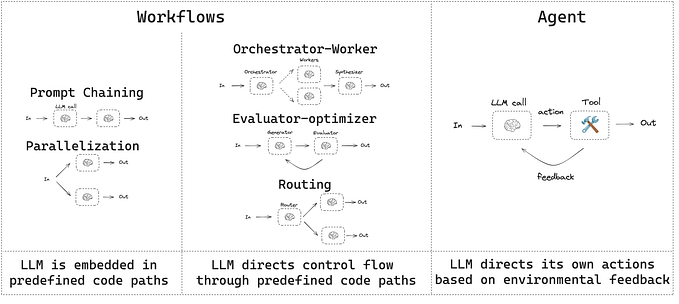

The image above shows an example where prompt pipeline stages are configured via a GUI.

In this example, each stage performs a different transformation on the data before passing the result to the next stage in the pipeline. For example, you can extract the entity values before processing other data properties.
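The stage-to-stage hand-off can be sketched in Python too. This is an illustration under assumptions, not how any particular no-code product works internally: each stage receives a record (a dict of fields), transforms it and passes the result on, and entity extraction runs first so later stages can use its output. The order-number pattern and the `extract_entities`, `tag_intent` and `run_stages` names are hypothetical.

```python
import re
from functools import reduce
from typing import Callable, List

Record = dict
Stage = Callable[[Record], Record]


def extract_entities(record: Record) -> Record:
    # Hypothetical entity pattern: order numbers such as "ORD-12345".
    order_ids = re.findall(r"\bORD-\d{5}\b", record["text"])
    return {**record, "order_ids": order_ids}


def tag_intent(record: Record) -> Record:
    # Runs after entity extraction, so it can rely on the "order_ids" field.
    intent = "order_status" if record["order_ids"] else "general"
    return {**record, "intent": intent}


def run_stages(stages: List[Stage], record: Record) -> Record:
    # Feed the output of each stage into the next one, left to right.
    return reduce(lambda rec, stage: stage(rec), stages, record)


out = run_stages([extract_entities, tag_intent], {"text": "Where is ORD-12345?"})
print(out["order_ids"], out["intent"])
```

Reordering the list passed to `run_stages` changes the pipeline without touching any stage, which mirrors how stages are rearranged in a GUI.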

Possible prompt pipeline stages include extracting snippets, extracting traits in the data, running custom scripts, entity extraction and more.

RAG

The most common use case for prompt pipelines is question answering (QnA) combined with Retrieval Augmented Generation (RAG).

Key elements along the prompt pipeline can include Keyword Extraction, which automatically detects important words in the text stored in a field.

Another element is extracting traits from the user input: the specific entities, attributes, or details that search users might include in their input.

Entity Extraction uses NLP techniques to identify named entities in the source field.

Semantic Meaning analysis is the technique of understanding the meaning and interpretation of words, signs, and sentence structure.

Custom Scripts allow you to enter scripts that perform any field-mapping task, such as deleting or renaming fields.
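Two of these stages are simple enough to sketch directly. The keyword extractor below is a naive stand-in (stop-word removal plus word frequency), not the NLP technique a production pipeline would use, and the stop-word list, field names and `custom_script` mapping are hypothetical.

```python
import re
from collections import Counter
from typing import List

# Tiny, hypothetical stop-word list; real pipelines use far larger ones.
STOP_WORDS = {"the", "a", "an", "is", "to", "of", "and", "in", "my", "how", "do", "i", "then"}


def extract_keywords(text: str, top_n: int = 3) -> List[str]:
    # Lower-case, split into words, drop stop words, rank by frequency.
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(w for w in words if w not in STOP_WORDS)
    return [word for word, _ in counts.most_common(top_n)]


def custom_script(record: dict) -> dict:
    # Field mapping: delete "internal_id" and rename "body" to "content".
    mapped = {k: v for k, v in record.items() if k != "internal_id"}
    if "body" in mapped:
        mapped["content"] = mapped.pop("body")
    return mapped


record = {"internal_id": 7, "body": "Reset the router, then reset the modem."}
record = custom_script(record)
record["keywords"] = extract_keywords(record["content"])
print(record)
```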

Lastly

In machine learning, a pipeline can be described as an end-to-end construct that orchestrates a flow of events and data.

The pipeline is initiated by a trigger; based on certain events and parameters, it then follows a flow that results in an output.

In the majority of prompt pipelines, the flow is initiated by a user request. The request is directed to a specific prompt template, which is injected with highly contextual information for inference.

Extracting a chunk that is suitable in both semantic accuracy and size requires a process of data chunking and indexing.
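A minimal sketch of chunking and indexing, assuming fixed-size word windows with overlap and a plain inverted index that scores chunks by query-term overlap. Real systems more often use embeddings and a vector index; `chunk_words`, `build_index` and `retrieve` are hypothetical helpers written for this illustration. The best-scoring chunk is then injected into a prompt template for the incoming request.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk_words(text: str, size: int = 12, overlap: int = 3) -> List[str]:
    # Windows of `size` words; consecutive windows share `overlap` words.
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


def build_index(chunks: List[str]) -> Dict[str, Set[int]]:
    # Inverted index: token -> ids of the chunks that contain it.
    index: Dict[str, Set[int]] = defaultdict(set)
    for chunk_id, chunk in enumerate(chunks):
        for token in tokenize(chunk):
            index[token].add(chunk_id)
    return index


def retrieve(query: str, chunks: List[str], index: Dict[str, Set[int]]) -> str:
    # Score each chunk by how many distinct query tokens it contains.
    scores: Dict[int, int] = defaultdict(int)
    for token in set(tokenize(query)):
        for chunk_id in index.get(token, set()):
            scores[chunk_id] += 1
    if not scores:
        return ""
    # Highest score wins; ties go to the earlier chunk.
    best = max(scores, key=lambda cid: (scores[cid], -cid))
    return chunks[best]


DOC = (
    "The office opens at eight on weekdays and closes at six. "
    "Visitors can park for free in lot B near the north entrance. "
    "The cafeteria serves lunch from noon until two every day."
)
TEMPLATE = "Context: {context}\nQuestion: {question}\nAnswer using only the context."

chunks = chunk_words(DOC)
index = build_index(chunks)
question = "Where can visitors park?"
prompt = TEMPLATE.format(context=retrieve(question, chunks, index), question=question)
print(prompt)
```

Smaller windows give more precise matches but risk splitting an answer across chunks, which is what the overlap is meant to soften.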


I’m currently the Chief Evangelist @ Kore.ai. I explore & write about all things at the intersection of AI & language, ranging from LLMs, Chatbots, Voicebots and Development Frameworks to Data-Centric latent spaces & more.