Practical Examples of OpenAI Function Calling

Here are three use-cases for OpenAI Function Calling with practical code examples.

I’m currently the Chief Evangelist @ HumanFirst. I explore and write about all things at the intersection of AI and language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces and more.

Introduction

Large Language Models (LLMs), like conversational UIs in general, are efficient at receiving highly unstructured data in the form of conversational natural language.

This unstructured data is then structured and processed, and finally turned back into unstructured data in the form of natural language output.

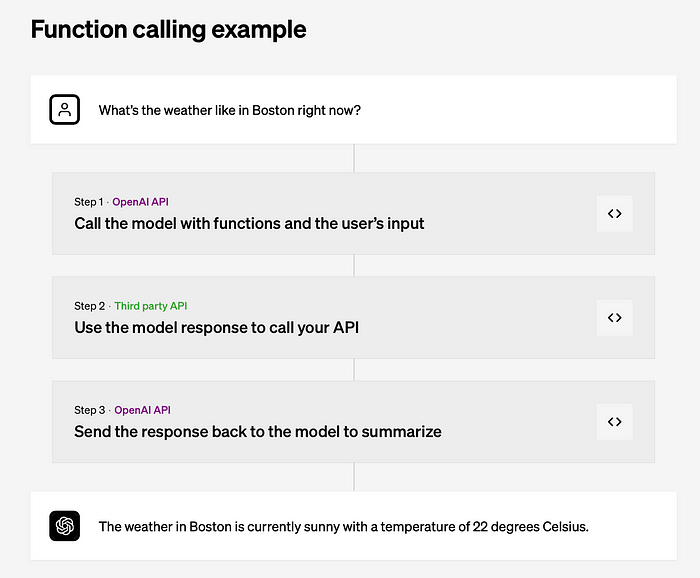

OpenAI Function Calling structures output for machine consumption, as JSON that can drive an API call, as opposed to human consumption in the form of unstructured natural language.

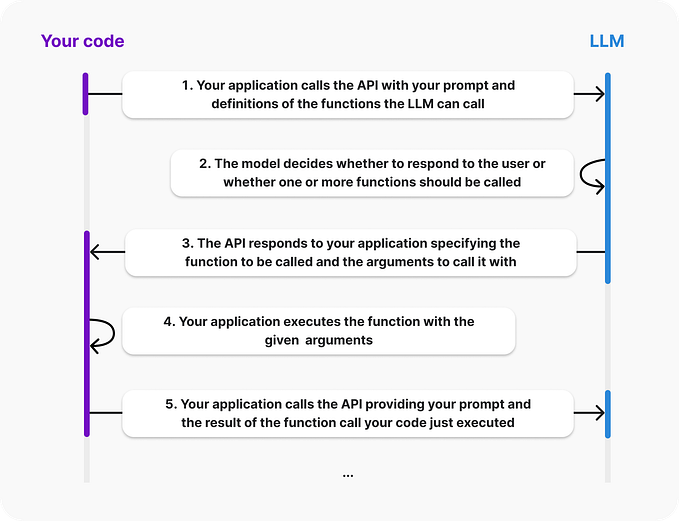

Function Calling

In an API call, you can describe functions to gpt-3.5-turbo-0613 and gpt-4-0613, and have the model intelligently generate a JSON object containing arguments, which you can then use to call the function in your code.

The Chat Completions API does not call the function itself; instead it generates a JSON document which your code can act on.
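Because the model only returns a function name and a JSON-encoded string of arguments, your own code has to look up and run the matching function. The sketch below assumes a hypothetical local `send_email` stub and uses a trimmed copy of the `message` object from the first example's response later in this article; it decodes `arguments` with `json.loads` and dispatches by name.

```python
import json
from typing import Optional


# Hypothetical local implementation; a real one would call a mail service.
def send_email(to_address: str, body: str, date: str, time: str) -> str:
    return f"Scheduled email to {to_address} for {date} at {time}"


# Map the function names the model may return to local callables.
AVAILABLE_FUNCTIONS = {"send_email": send_email}


def dispatch(message: dict) -> Optional[str]:
    """Run the function the model asked for, or return None if it asked for none."""
    call = message.get("function_call")
    if call is None:
        return None
    func = AVAILABLE_FUNCTIONS[call["name"]]
    # "arguments" is a JSON string, so it must be decoded before use.
    kwargs = json.loads(call["arguments"])
    return func(**kwargs)


# Trimmed "message" object taken from the first example's response.
message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "send_email",
        "arguments": "{\n \"to_address\": \"Cobus@HumanFirst.ai\",\n \"body\": \"Hello Cobus, \\n\\nCould you please provide the sales forecast spreadsheet? \\n\\nBest Regards\",\n \"date\": \"tomorrow\",\n \"time\": \"12 noon\"\n}",
    },
}

print(dispatch(message))
```

Note that `date` and `time` come back as free text ("tomorrow", "12 noon"), so a real scheduler would still need to resolve them into an actual timestamp.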

Read more about OpenAI Function Calling here.

With OpenAI Function Calling, the focus is on the JSON output.

Here are three practical examples of function calling:

Create chatbots that answer questions by calling external APIs:

In this example, an email template is defined with a few fields, which the model must populate from the natural language input.

If the request JSON, including the function definition, is not well formed, the API returns an error. Hence there is a level of rigidity in how the JSON structure to be populated must be defined.
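The function definition in this example marks no field as required, so nothing in the schema asks the model to fill all four. A hedged sketch: JSON Schema's `required` keyword can be added to the `parameters` object (an addition not present in the original example), and because the model is not guaranteed to follow the schema exactly, it is worth checking the returned arguments locally as well. `missing_fields` is a hypothetical helper, and the argument string passed to it is hand-written test input, not model output.

```python
import json

# Function definition from this example, with a JSON Schema "required" list
# added (an assumption: the original definition does not include it).
SEND_EMAIL_SCHEMA = {
    "name": "send_email",
    "description": "template to have an email sent.",
    "parameters": {
        "type": "object",
        "properties": {
            "to_address": {"type": "string", "description": "To address for email"},
            "body": {"type": "string", "description": "Body of the email"},
            "date": {"type": "string", "description": "the date the email must be sent."},
            "time": {"type": "string", "description": "the time the email must be sent."},
        },
        "required": ["to_address", "body", "date", "time"],
    },
}


def missing_fields(schema: dict, arguments: str) -> list:
    """Hypothetical helper: list required fields absent from the model's arguments."""
    args = json.loads(arguments)
    required = schema["parameters"].get("required", [])
    return [field for field in required if field not in args]


# Hand-written arguments string with two fields left out.
print(missing_fields(SEND_EMAIL_SCHEMA, '{"to_address": "Cobus@HumanFirst.ai", "body": "Hi"}'))
```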

```shell
pip install requests
```

```python
import json
import os

import requests

# The key is read from the environment; the openai package is not needed
# because the request is sent as a plain HTTP POST.
api_key = os.environ["OPENAI_API_KEY"]

url = "https://api.openai.com/v1/chat/completions"

payload = json.dumps({
    "model": "gpt-4-0613",
    "messages": [
        {
            "role": "user",
            "content": "Send Cobus from HumanFirst AI an email and ask him for the sales forecast spreadsheet. Schedule the mail for tomorrow at 12 noon."
        }
    ],
    # Function definitions the model may choose to "call"
    "functions": [
        {
            "name": "send_email",
            "description": "template to have an email sent.",
            "parameters": {
                "type": "object",
                "properties": {
                    "to_address": {"type": "string", "description": "To address for email"},
                    "body": {"type": "string", "description": "Body of the email"},
                    "date": {"type": "string", "description": "the date the email must be sent."},
                    "time": {"type": "string", "description": "the time the email must be sent."}
                }
            }
        }
    ]
})

headers = {
    "Content-Type": "application/json",
    # The OpenAI API expects a Bearer token, not Basic auth
    "Authorization": f"Bearer {api_key}",
}

response = requests.post(url, headers=headers, data=payload)
print(response.text)
```

And the response from the model:

```json
{
  "id": "chatcmpl-7RjMh7I0rVmCJkTCk4wvHDSNg8uQQ",
  "object": "chat.completion",
  "created": 1686843595,
  "model": "gpt-4-0613",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": null,
        "function_call": {
          "name": "send_email",
          "arguments": "{\n \"to_address\": \"Cobus@HumanFirst.ai\",\n \"body\": \"Hello Cobus, \\n\\nCould you please provide the sales forecast spreadsheet? \\n\\nBest Regards\",\n \"date\": \"tomorrow\",\n \"time\": \"12 noon\"\n}"
        }
      },
      "finish_reason": "function_call"
    }
  ],
  "usage": {
    "prompt_tokens": 118,
    "completion_tokens": 65,
    "total_tokens": 183
  }
}
```

Convert unstructured natural language into API calls:

In this example, an order is converted into a JSON structure with the delivery address, order details, delivery date and delivery notes.
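Once the model has returned the `order_detail` arguments, the JSON string can be loaded into a typed object before it is passed on to an ordering system. A minimal sketch, assuming a hypothetical `Order` dataclass whose fields mirror the function definition; the `arguments` string is copied from this example's response that follows.

```python
import json
from dataclasses import dataclass


@dataclass
class Order:
    """Hypothetical typed container for the order_detail arguments."""
    to_address: str
    order: str
    date: str
    notes: str = ""  # defaults to empty in case the model omits delivery notes


def parse_order(arguments: str) -> Order:
    # Decode the JSON string the model returned, then build a typed object.
    # Unexpected keys raise TypeError, which surfaces invented parameters early.
    return Order(**json.loads(arguments))


# The "arguments" string returned in this example's response.
arguments = "{\n \"to_address\": \"22 Fourth Avenue, Woodlands\",\n \"order\": \"two packs of cans\",\n \"date\": \"27th of July\",\n \"notes\": \"Just leave the order at the door, we live in a safe area.\"\n}"

parsed = parse_order(arguments)
print(parsed.to_address, "|", parsed.date)
```

The date is still free text ("27th of July"), so it would need further parsing before it could drive an actual delivery schedule.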

```python
import json
import os

import requests

url = "https://api.openai.com/v1/chat/completions"

payload = json.dumps({
    "model": "gpt-4-0613",
    "messages": [
        {
            "role": "user",
            "content": "I would like to order two packs of cans, and have it delivered to 22 Fourth Avenue, Woodlands. I need this on the 27th of July. Just leave the order at the door, we live in a safe area."
        }
    ],
    "functions": [
        {
            "name": "order_detail",
            "description": "template to capture an order.",
            "parameters": {
                "type": "object",
                "properties": {
                    "to_address": {"type": "string", "description": "To address for the delivery"},
                    "order": {"type": "string", "description": "The detail of the order"},
                    "date": {"type": "string", "description": "the date for delivery."},
                    "notes": {"type": "string", "description": "Any delivery notes."}
                }
            }
        }
    ]
})

headers = {
    "Content-Type": "application/json",
    # Bearer token auth, with the key read from the environment
    "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
}

response = requests.post(url, headers=headers, data=payload)
print(response.text)
```

And the response:

```json
{
  "id": "chatcmpl-7RjQA8bEiPVRwh72gNUFouBq6urjo",
  "object": "chat.completion",
  "created": 1686843810,
  "model": "gpt-4-0613",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": null,
        "function_call": {
          "name": "order_detail",
          "arguments": "{\n \"to_address\": \"22 Fourth Avenue, Woodlands\",\n \"order\": \"two packs of cans\",\n \"date\": \"27th of July\",\n \"notes\": \"Just leave the order at the door, we live in a safe area.\"\n}"
        }
      },
      "finish_reason": "function_call"
    }
  ],
  "usage": {
    "prompt_tokens": 132,
    "completion_tokens": 61,
    "total_tokens": 193
  }
}
```

Extract structured data from text:

This final example has a list of names and birthdays, which needs to be structured into JSON. Something I found interesting is that the model does not iterate through the names and dates.

Only the first name and date are placed within the JSON; the set of data is not recognised as a list and iterated through.
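A likely reason is that the function definition below describes a single object with one `name` and one `birthday` string, so the schema only has room for one pair. A hedged sketch, not run against the API here: declaring an array of objects (standard JSON Schema `"type": "array"` with `items`) gives the model somewhere to put every person. `BIRTHDAYS_SCHEMA`, the `people` property and `to_pairs` are hypothetical names, and the sample string is hand-written in the requested shape, not real model output.

```python
import json

# Hypothetical alternative definition: wrap the name/birthday pair in an
# array so the schema can hold every person mentioned in the input.
BIRTHDAYS_SCHEMA = {
    "name": "birthdays",
    "description": "create a list of names and birthdays",
    "parameters": {
        "type": "object",
        "properties": {
            "people": {
                "type": "array",
                "description": "Every person mentioned, with their birthday",
                "items": {
                    "type": "object",
                    "properties": {
                        "name": {"type": "string", "description": "The name of the person"},
                        "birthday": {"type": "string", "description": "The date of their birthday"},
                    },
                    "required": ["name", "birthday"],
                },
            }
        },
        "required": ["people"],
    },
}


def to_pairs(arguments: str) -> list:
    """Flatten the model's arguments into (name, birthday) tuples."""
    return [(p["name"], p["birthday"]) for p in json.loads(arguments)["people"]]


# Hand-written sample in the shape the schema requests; NOT real model output.
sample = '{"people": [{"name": "John", "birthday": "5 April"}, {"name": "Elli", "birthday": "24 December"}]}'
print(to_pairs(sample))
```

The definition would be sent in the `functions` list exactly as in the request below; whether the model then returns all five entries is something to verify by running it.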

```python
import json
import os

import requests

url = "https://api.openai.com/v1/chat/completions"

payload = json.dumps({
    "model": "gpt-4-0613",
    "messages": [
        {
            "role": "user",
            "content": "John has a birthday on 5 April and Adam has his on the 1 September, Elli is 24 December, Robert has his birthday on 6 March and lastly Adam is 15 April."
        }
    ],
    "functions": [
        {
            "name": "birthdays",
            "description": "create a document of names and birthdays of 5 people",
            "parameters": {
                "type": "object",
                # A single name/birthday pair: the schema has no list type
                "properties": {
                    "name": {"type": "string", "description": "The name of the person"},
                    "birthday": {"type": "string", "description": "and the date of their birthday"}
                }
            }
        }
    ]
})

headers = {
    "Content-Type": "application/json",
    # Bearer token auth, with the key read from the environment
    "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
}

response = requests.post(url, headers=headers, data=payload)
print(response.text)
```

And the result:

```json
{
  "id": "chatcmpl-7RjYzH9XaSAQ3Qn6X5j11J77vBGQX",
  "object": "chat.completion",
  "created": 1686844357,
  "model": "gpt-4-0613",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": null,
        "function_call": {
          "name": "birthdays",
          "arguments": "{ \"name\": \"John\", \"birthday\": \"5 April\" }"
        }
      },
      "finish_reason": "function_call"
    }
  ],
  "usage": {
    "prompt_tokens": 111,
    "completion_tokens": 21,
    "total_tokens": 132
  }
}
```

In Conclusion

There are a few considerations, however:

- Programmatically, the chatbot/Conversational UI will have to know that the output from the LLM must be in JSON format. Hence some form of classification or intent recognition will be needed to detect when the output should be JSON.

- A predefined template has to exist and be defined as input to the completion LLM. As seen in the examples, the JSON template guides the LLM on how to populate the values.

- More importantly, as seen in the examples, the parameters to be populated must be well defined, and the whole request body must be valid JSON. If it is not, the API returns the following error:

“We could not parse the JSON body of your request. (HINT: This likely means you aren’t using your HTTP library correctly. The OpenAI API expects a JSON payload, but what was sent was not valid JSON. If you have trouble figuring out how to fix this, please contact us through our help center at help.openai.com.)”
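The error above concerns the request. On the response side, the replies shown in this article carry `"finish_reason": "function_call"` and `"content": null` when the model chose a function, which gives a simple signal for deciding whether to treat a reply as JSON or as prose. A minimal sketch, assuming a hypothetical `extract_arguments` helper and a trimmed copy of the third example's response; since the model is not guaranteed to emit valid JSON, malformed arguments are surfaced as an error.

```python
import json
from typing import Optional


def extract_arguments(response: dict) -> Optional[dict]:
    """Return decoded function arguments, or None if the model replied in prose."""
    choice = response["choices"][0]
    if choice.get("finish_reason") != "function_call":
        return None  # ordinary text reply: use choice["message"]["content"]
    raw = choice["message"]["function_call"]["arguments"]
    try:
        return json.loads(raw)
    except json.JSONDecodeError as err:
        # Surface malformed output clearly instead of failing later.
        raise ValueError(f"Model returned malformed arguments: {raw!r}") from err


# Trimmed version of the third example's response.
response = {
    "choices": [{
        "index": 0,
        "message": {
            "role": "assistant",
            "content": None,
            "function_call": {
                "name": "birthdays",
                "arguments": "{ \"name\": \"John\", \"birthday\": \"5 April\" }",
            },
        },
        "finish_reason": "function_call",
    }]
}

print(extract_arguments(response))
```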
