PAL: Program-Aided Large Language Models

The Program-Aided Language Model (PAL) method uses LLMs to read natural language problems and generate programs as reasoning steps. The code is executed by an interpreter to produce the answer.

I’m currently the Chief Evangelist @ HumanFirst. I explore and write about all things at the intersection of AI and language, including NLU design, evaluation and optimisation, data-centric prompt tuning, and LLM observability, evaluation and fine-tuning.

Two years ago, reasoning was still seen as a major obstacle for Large Language Models (LLMs) to overcome. Of late, LLMs have shown impressive results in the area of common-sense reasoning.

LLMs have also exhibited an impressive capacity for arithmetic and symbolic reasoning when given a few examples to establish a contextual framework.

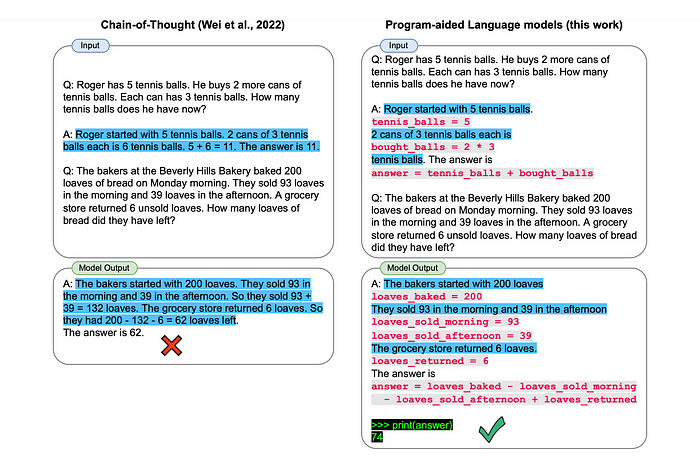

This success can be largely attributed to prompting methods such as “chain-of-thought”, which use the LLM both to break the problem description down into individual steps and to solve each step.

LLMs tend to be proficient at decomposing a problem into steps; however, they often make logical and arithmetic mistakes during the solution stage, even when the problem was broken down properly.

⭐️ Please follow me on LinkedIn for updates on LLMs ⭐️

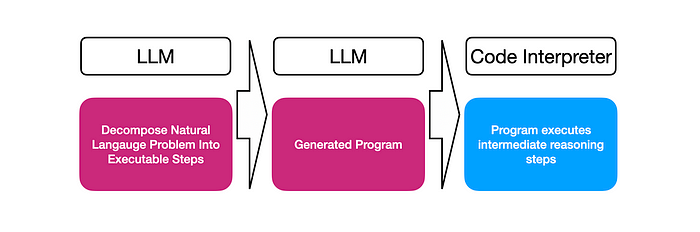

The image below shows the basic sequence of events for PAL.

- The LLM decomposes the natural language problem into executable steps.

- A computer program (code) is generated, and a code interpreter executes it to yield the result.
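The two steps above can be sketched in a few lines of Python. This is a minimal illustration, not the implementation from the research: `fake_llm` and `run_pal` are hypothetical names, the "model" is a hard-coded stand-in that returns program text, and the apples problem is made up for the example.

```python
from typing import Callable


def fake_llm(problem: str) -> str:
    """Hypothetical stand-in for an LLM call: returns program text for the problem."""
    # A real model would generate this text; it is hard-coded here for illustration.
    return (
        "def solution():\n"
        "    apples_start = 23\n"
        "    apples_used = 20\n"
        "    apples_bought = 6\n"
        "    return apples_start - apples_used + apples_bought\n"
    )


def run_pal(problem: str, generate: Callable[[str], str]) -> object:
    """Step 1: the model writes a program. Step 2: the interpreter runs it."""
    program = generate(problem)
    namespace: dict = {}
    # exec() runs arbitrary code, so real model output should be sandboxed first.
    exec(program, namespace)
    return namespace["solution"]()


answer = run_pal(
    "The cafeteria had 23 apples. They used 20 and bought 6 more. How many are there now?",
    fake_llm,
)
print(answer)  # 9
```

The point of the split is that the model only has to get the steps right; the arithmetic itself is left to the interpreter.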

The difference between chain-of-thought reasoning and PAL is shown below in a side-by-side comparison, with the chain-of-thought example on the left and the PAL approach on the right.
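To make the contrast concrete, here is a small sketch of how the final answer is obtained in each case. It does not reproduce the figure from the research: both model outputs are hypothetical and hand-written for a made-up problem, and the function names are assumptions for illustration only.

```python
import re

# Hypothetical model outputs for the same made-up problem:
# "A shelf holds 12 books. 5 are removed and 2 boxes of 4 books are added. How many books?"
cot_output = (
    "The shelf starts with 12 books. Removing 5 leaves 7. "
    "2 boxes of 4 books is 8 books. 7 + 8 = 15. The answer is 15."
)
pal_output = (
    "books_start = 12\n"
    "books_removed = 5\n"
    "books_added = 2 * 4\n"
    "answer = books_start - books_removed + books_added\n"
)


def answer_from_cot(text: str) -> int:
    """Chain-of-thought: the model did the arithmetic; we only parse its final claim."""
    match = re.search(r"The answer is (-?\d+)", text)
    if match is None:
        raise ValueError("no final answer found")
    return int(match.group(1))


def answer_from_pal(program: str) -> int:
    """PAL: the interpreter does the arithmetic by running the generated program."""
    namespace: dict = {}
    exec(program, namespace)  # untrusted code should be sandboxed in practice
    return namespace["answer"]


print(answer_from_cot(cot_output))
print(answer_from_pal(pal_output))
```

With chain-of-thought, a slip in "7 + 8" would be carried straight into the parsed answer; with PAL the same slip cannot happen, because the sum is computed rather than written.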

Another practical example from the research:

The complete working code is shown below, including the portion where OpenAI is installed and imported, and where the environment variable for the API key is set.

The question is defined and assigned to the variable question:

Jan has three times the number of pets as Marcia. Marcia has two more pets than Cindy. If Cindy has four pets, how many total pets do the three have?

```shell
pip install langchain
pip install openai
```

```python
import os

from langchain.chains import PALChain
from langchain import OpenAI

# Set the OpenAI API key as an environment variable (replace the placeholder).
os.environ['OPENAI_API_KEY'] = "xxxxxxxxxxxxxxxxx"

# temperature=0 keeps the generated program as deterministic as possible.
llm = OpenAI(temperature=0, max_tokens=512, model_name='gpt-4-0314')

# Build a PAL chain using LangChain's math prompt.
pal_chain = PALChain.from_math_prompt(llm, verbose=True)

question = "Jan has three times the number of pets as Marcia. Marcia has two more pets than Cindy. If Cindy has four pets, how many total pets do the three have?"

pal_chain.run(question)
```

The output from the 🦜🔗LangChain PAL:

```
> Entering new PALChain chain...
def solution():
    """Jan has three times the number of pets as Marcia. Marcia has two more pets than Cindy. If Cindy has four pets, how many total pets do the three have?"""
    cindy_pets = 4
    marcia_pets = cindy_pets + 2
    jan_pets = marcia_pets * 3
    total_pets = cindy_pets + marcia_pets + jan_pets
    result = total_pets
    return result

> Finished chain.
'28'
```

In Conclusion

The output of PAL and Agents might seem similar at first glance. The main differences between PAL and Agents are:

- Agents need to have various tools assigned to them; the agent in turn leverages its assigned tools to reach a final conclusion.

- Agent tools can also include math-related libraries, search, human-in-the-loop, LLMs and more.

- PAL, like Agents, does a decomposition of the problem.

- However, in the case of PAL, the problem is decomposed into the steps of a program, which is then executed by an interpreter.
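The structural difference in the list above can be sketched roughly as follows. This is a toy illustration under stated assumptions: `TOOLS`, `run_agent` and the scripted steps are hypothetical and do not reflect how any real agent framework is implemented. In a real agent, the LLM would choose the tool at each step; here the steps are scripted by hand.

```python
from typing import Callable, Dict, List, Tuple

# Agent style: a registry of assigned tools (both tools are toy stand-ins).
TOOLS: Dict[str, Callable[[str], str]] = {
    "calculator": lambda expr: str(eval(expr, {"__builtins__": {}})),
    "lookup": lambda key: {"cindy_pets": "4"}[key],
}


def run_agent(steps: List[Tuple[str, str]]) -> str:
    """Call one tool per step; {prev} in a tool input is replaced by the last observation."""
    observation = ""
    for tool_name, tool_input in steps:
        observation = TOOLS[tool_name](tool_input.format(prev=observation))
    return observation


# Scripted stand-in for the tool choices an LLM would make step by step.
agent_result = run_agent([
    ("lookup", "cindy_pets"),
    ("calculator", "{prev} + 2"),
])

# PAL style: the whole decomposition is one program, run once by the interpreter.
program = (
    "cindy_pets = 4\n"
    "marcia_pets = cindy_pets + 2\n"
    "result = marcia_pets\n"
)
namespace: dict = {}
exec(program, namespace)  # sandbox untrusted code in practice
pal_result = namespace["result"]

print(agent_result, pal_result)
```

Both paths arrive at Marcia's six pets, but the agent gets there through a series of separate tool calls, while PAL hands a single program to the interpreter.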

PAL: Program-aided Language Models