OpenAI Assistant With Retriever Tool

Assistants are really an OpenAI version of Autonomous Agents. The vision of OpenAI with the Assistant API is to help developers build powerful AI assistants capable of performing a variety of tasks via an array of tools.

Some Background

Playing around with OpenAI’s Assistant API, I get the sense that OpenAI considers the Assistant an LLM-based agent, or autonomous agent, that makes use of tools.

To create an Assistant you need to specify a model the Assistant must use.

The instructions and content parameters can be used to describe the Assistant in natural language. The instructions parameter defines the goals of the Assistant and is analogous to a system message in chat.

The OpenAI Assistant can have access to up to 128 tools. These tools can be hosted by OpenAI, or they can be third-party tools accessed via function calling.

Currently there are two OpenAI-hosted tools: code_interpreter and retrieval.
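To make the tool limit concrete, the sketch below assembles a tools list that mixes the two hosted tools with one custom function tool and checks it against the 128-tool ceiling. The `get_order_status` function, its schema, and the `build_tools` helper are hypothetical names used purely for illustration, and no API request is made.

```python
MAX_TOOLS = 128  # limit stated in the article

# Hypothetical custom tool, described with a JSON Schema for its arguments.
ORDER_STATUS_TOOL = {
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the status of a customer order.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}


def build_tools(hosted, functions):
    """Combine hosted tool names and function tool definitions into one list."""
    tools = [{"type": name} for name in hosted] + list(functions)
    if len(tools) > MAX_TOOLS:
        raise ValueError(f"{len(tools)} tools given, at most {MAX_TOOLS} allowed")
    return tools


tools = build_tools(["code_interpreter", "retrieval"], [ORDER_STATUS_TOOL])
print([t["type"] for t in tools])
```

A list built this way could be passed as the tools argument when the Assistant is created.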

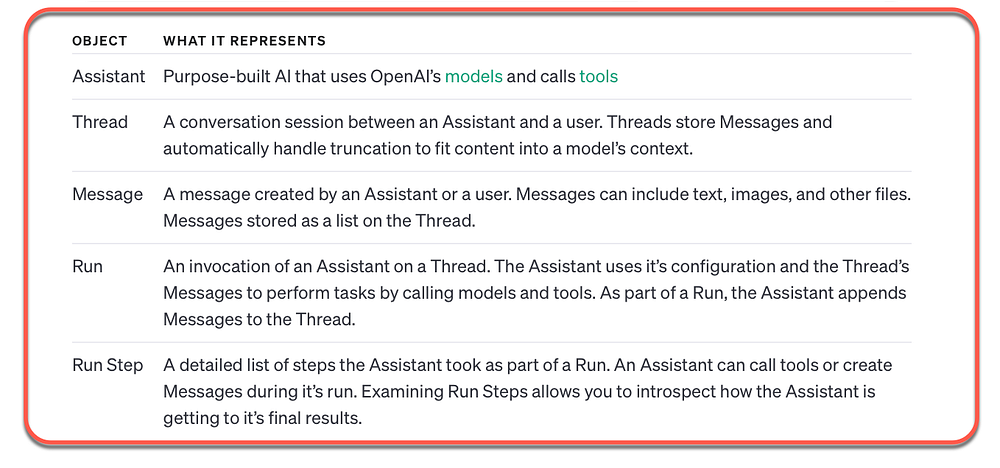

The table below from OpenAI defines the building blocks of an Assistant/Agent in their world.

What Can Assistants Do?

Assistants can access OpenAI models with instructions describing the personality and capabilities of the Assistant. In the case of Agents in the context of other frameworks, like LangChain, a meta prompt of sorts is at the heart of the agent.

By comparison, OpenAI's approach is very much prompt-less.

The Assistant can access multiple tools in parallel, both OpenAI hosted tools and custom tools accessed via function calling.
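When a run requests several custom functions at once, your own code has to execute each call and hand the results back. The following is a minimal sketch of that dispatch step, assuming each tool call exposes an `id`, a `function.name`, and JSON-encoded `function.arguments`. The `get_weather` and `get_time` functions and the stand-in tool-call objects are made up for the example, and no API request is made.

```python
import json
from types import SimpleNamespace


# Hypothetical local functions the Assistant is allowed to call.
def get_weather(city):
    return f"Sunny in {city}"


def get_time(timezone):
    return f"12:00 in {timezone}"


REGISTRY = {"get_weather": get_weather, "get_time": get_time}


def run_tool_calls(tool_calls):
    """Execute every requested call and collect one output per call id."""
    outputs = []
    for call in tool_calls:
        func = REGISTRY[call.function.name]
        kwargs = json.loads(call.function.arguments)
        outputs.append({"tool_call_id": call.id, "output": str(func(**kwargs))})
    return outputs


# Stand-ins for the tool calls a run would request in a single step.
fake_calls = [
    SimpleNamespace(id="call_1", function=SimpleNamespace(
        name="get_weather", arguments='{"city": "Paris"}')),
    SimpleNamespace(id="call_2", function=SimpleNamespace(
        name="get_time", arguments='{"timezone": "UTC"}')),
]
tool_outputs = run_tool_calls(fake_calls)
print(tool_outputs)
```

With a real run in the `requires_action` state, a list of this shape is what would be handed back through `client.beta.threads.runs.submit_tool_outputs`.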

Conversation and dialog management is performed via threads. Threads are persistent and store message history. Conversations are also truncated when they grow too long for the model's context length.

A thread is created once, and messages are appended to the thread as the conversation ensues.
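The thread behaviour can be pictured with a toy model. The sketch below is not how OpenAI implements threads; `ToyThread`, the word-count token estimate, and the drop-oldest truncation rule are all assumptions chosen to show the idea of persistent history combined with a context budget.

```python
class ToyThread:
    """Stores the full message history and builds a truncated model context."""

    def __init__(self, max_tokens):
        self.max_tokens = max_tokens
        self.messages = []  # persistent history, never truncated

    def append(self, role, content):
        self.messages.append({"role": role, "content": content})

    @staticmethod
    def count_tokens(message):
        # Crude assumption: one token per whitespace-separated word.
        return len(message["content"].split())

    def context(self):
        """Return the most recent messages that fit into max_tokens."""
        kept, used = [], 0
        for message in reversed(self.messages):
            cost = self.count_tokens(message)
            if used + cost > self.max_tokens:
                break
            kept.append(message)
            used += cost
        return list(reversed(kept))


thread = ToyThread(max_tokens=10)
thread.append("user", "Who won the 2023 rugby world cup?")
thread.append("assistant", "South Africa won the final.")
thread.append("user", "Who did they beat?")
print(len(thread.messages), [m["content"] for m in thread.context()])
```

The full history stays in `messages`, while `context()` returns only the newest messages that fit the budget, so the oldest question is dropped from what the model would see.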

Retrieval Tool

The Assistant’s capabilities are expanded through retrieval, incorporating external knowledge beyond its inherent model. This can include proprietary product data or documents supplied by users.

When a document is uploaded and passed to the Assistant, OpenAI automatically chunks the document into smaller segments, creates an index, stores the embeddings, and uses vector search to fetch pertinent information for responding to user queries.
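The chunk, embed, and search steps can be illustrated with a small self-contained sketch. OpenAI does not expose the internals of its Retrieval tool, so everything here is an assumption for illustration: fixed-size word chunks, bag-of-words counts standing in for learned embeddings, and cosine similarity as the search metric.

```python
import math
import re
from collections import Counter


def chunk(text, size=8):
    """Split text into chunks of `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text):
    """Toy embedding: lowercase word counts (a real system uses a model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(index, query, k=1):
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


document = (
    "South Africa won the 2023 Rugby World Cup final. "
    "The tournament was hosted in France during autumn."
)
index = [(c, embed(c)) for c in chunk(document)]  # the stored "embeddings"
print(search(index, "Who won the rugby world cup?"))
```

The chunk that shares the most words with the question ranks first, and that chunk is what would be handed to the model as extra context.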

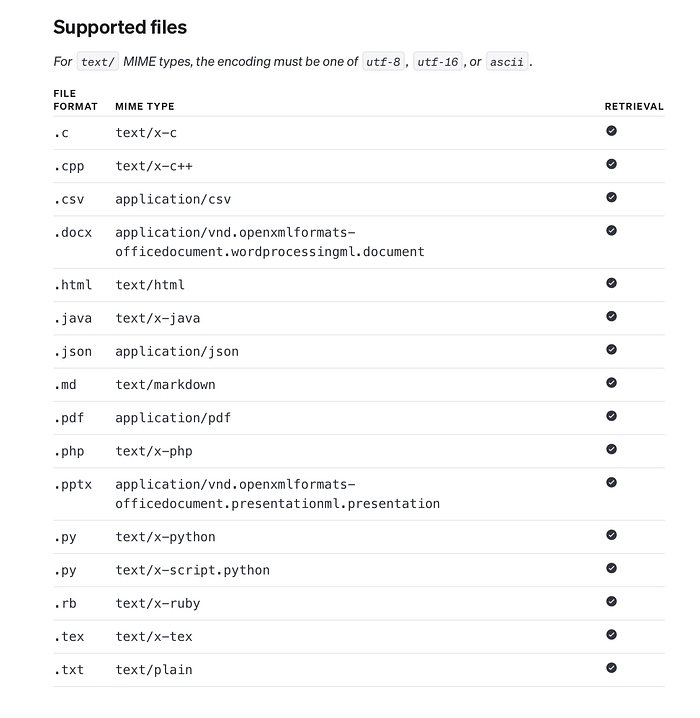

The maximum allowable file size is 512MB. Retrieval accommodates a wide range of file formats, such as .pdf, .md, .docx, and numerous others.
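A quick local check before uploading can save a failed request. The sketch below is only an illustration: `check_upload` is a hypothetical helper, the 512MB limit is interpreted as binary megabytes, and the extension set is deliberately partial, containing only the formats named in this article plus the .txt file used in the walkthrough.

```python
import os
import tempfile

MAX_FILE_BYTES = 512 * 1024 * 1024  # 512MB limit; binary megabytes assumed
# Partial list: formats named in this article, not the full supported set.
KNOWN_EXTENSIONS = {".pdf", ".md", ".docx", ".txt"}


def check_upload(path):
    """Return a list of problems found; an empty list means the file looks fine."""
    problems = []
    extension = os.path.splitext(path)[1].lower()
    if extension not in KNOWN_EXTENSIONS:
        problems.append(f"unrecognised extension: {extension or '(none)'}")
    if os.path.getsize(path) > MAX_FILE_BYTES:
        problems.append("file is larger than 512MB")
    return problems


# Demonstration with a small temporary text file.
with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as handle:
    handle.write("South Africa won the 2023 Rugby World Cup.")
    sample_path = handle.name

result = check_upload(sample_path)
os.remove(sample_path)
print(result)
```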

You can find further information on the supported file extensions and their corresponding MIME types in the “Supported files” section of the OpenAI documentation.

When the Python code is run from the notebook, the Assistant also appears in the OpenAI dashboard/playground. With Retrieval, it does take a while for the file to be processed.

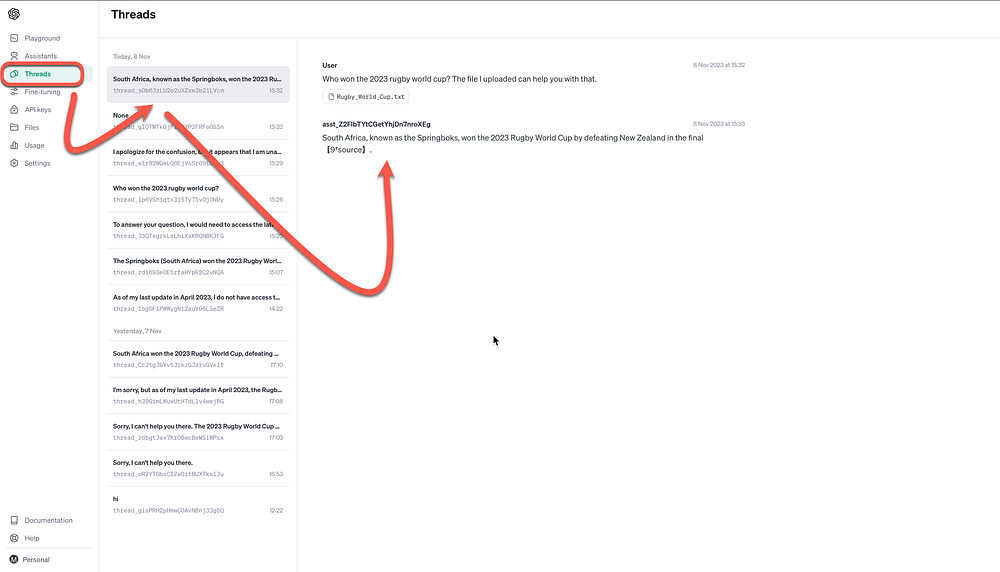

I can see a scenario where assistants are created and developed via Python, and monitored and tested via the OpenAI GUI. This approach is somewhat reminiscent of LangSmith.

Below, the threads are visible in the GUI. These conversations were all conducted via the Python notebook, and the conversation threads can be inspected via the GUI.

Below is the complete code to run the OpenAI Assistant in a Python notebook, making use of the Retriever tool. All you need is an OpenAI API key.

pip install openai

import os
import openai
import requests
import json
from openai import OpenAI

# Pass retrieval in the tools parameter of the Assistant to enable Retrieval
api_key = "sk-rxolBSN8pMbAaQ7DUsxlT3BlbkFsfsfsfsfswrewrgwwrAfKazZs"  # replace with your own API key
client = OpenAI(api_key=api_key)

assistant = client.beta.assistants.create(
    name="General Knowledge Bot",
    instructions="You are a customer support chatbot. Use your knowledge base to best respond to customer queries.",
    model="gpt-4-1106-preview",
    tools=[{"type": "retrieval"}]
)

# File upload via Colab notebook
from google.colab import files

uploaded = files.upload()
for name, data in uploaded.items():
    # Save each uploaded file to the notebook's working directory
    with open(name, 'wb') as file:
        file.write(data)
    print('saved file', name)

# Upload the file to OpenAI so that it can be used with the Assistant
file = client.files.create(
    file=open("/content/Rugby_World_Cup.txt", "rb"),
    purpose='assistants'
)

# Pass retrieval in the tools parameter of the Assistant to enable Retrieval
assistant = client.beta.assistants.create(
    name="General Knowledge Bot",
    instructions="You answer general knowledge questions as accurately as possible.",
    model="gpt-4-1106-preview",
    tools=[{"type": "retrieval"}]
)

# Files can also be added to a Message in a Thread. These files are only accessible within this specific thread.
# After having uploaded a file, you can pass the ID of this File when creating the Message.

# Create the thread that will hold the conversation
thread = client.beta.threads.create()

message = client.beta.threads.messages.create(
    thread_id=thread.id,
    role="user",
    content="Who won the 2023 rugby world cup?",
    file_ids=[file.id]
)

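# Start a run of the Assistant on the thread
run = client.beta.threads.runs.create(
    thread_id=thread.id,
    assistant_id=assistant.id,
    instructions="You answer general knowledge questions as accurately as possible."
)

# Retrieve the run to check its status; the reply only appears in the thread once the run has completed
run = client.beta.threads.runs.retrieve(
    thread_id=thread.id,
    run_id=run.id
)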
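A run is processed asynchronously, so a single retrieve call may return while the run is still queued or in progress. One possible way to wait for the result is to poll until the run reaches a terminal status. In the sketch below, `wait_for_run` and its parameters are hypothetical helper names, and a small fake client stands in for the real one so the loop can be exercised without an API key.

```python
import time
from types import SimpleNamespace

# Statuses after which a run will not change any further.
TERMINAL_STATUSES = {"completed", "failed", "cancelled", "expired"}


def wait_for_run(client, thread_id, run_id, interval=1.0, timeout=120.0):
    """Poll runs.retrieve until the run reaches a terminal status or times out."""
    deadline = time.monotonic() + timeout
    while True:
        run = client.beta.threads.runs.retrieve(thread_id=thread_id, run_id=run_id)
        if run.status in TERMINAL_STATUSES:
            return run
        if time.monotonic() >= deadline:
            raise TimeoutError(f"run {run_id} still {run.status} after {timeout}s")
        time.sleep(interval)


class FakeRuns:
    """Stand-in for client.beta.threads.runs that replays a list of statuses."""

    def __init__(self, statuses):
        self._statuses = iter(statuses)

    def retrieve(self, thread_id, run_id):
        return SimpleNamespace(id=run_id, status=next(self._statuses))


fake_client = SimpleNamespace(beta=SimpleNamespace(threads=SimpleNamespace(
    runs=FakeRuns(["queued", "in_progress", "completed"]))))
finished = wait_for_run(fake_client, "thread_demo", "run_demo", interval=0.01)
print(finished.status)
```

With the real client, the call would look like `run = wait_for_run(client, thread.id, run.id)`, placed before the messages are listed. A run that is waiting on function outputs reports `requires_action` and would need separate handling.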

# List the messages in the thread, newest first
messages = client.beta.threads.messages.list(
    thread_id=thread.id
)
print(messages)

Below is the thread with a question regarding a very recent occurrence that falls outside the knowledge of the LLM, with the Assistant retrieving the data via the Retriever tool.

SyncCursorPage[ThreadMessage](data=[
  ThreadMessage(id='msg_nQqma6fUFnx9WhT5ilA11SxU',
    assistant_id='asst_Z2FlbTYtCGetYhjDn7nroXEg',
    content=[MessageContentText(text=Text(annotations=[],
      value='South Africa, known as the Springboks, won the 2023 Rugby World Cup by defeating New Zealand in the final.'),
      type='text')],
    created_at=1699450387,
    file_ids=[],
    metadata={},
    object='thread.message',
    role='assistant',
    run_id='run_hiQaWXIS9gIdWEV86vC3h9zE',
    thread_id='thread_s0b6JzLU2o2uXZsw3b21LVcn'),
  ThreadMessage(id='msg_2zeWwRIbBiZzMp7Q1YnnVgjN',
    assistant_id=None,
    content=[MessageContentText(text=Text(annotations=[],
      value='Who won the 2023 rugby world cup? The file I uploaded can help you with that.'),
      type='text')],
    created_at=1699450378,
    file_ids=['file-e0GVGxhtPOOVuRdWDsd2Z8Jo'],
    metadata={},
    object='thread.message',
    role='user',
    run_id=None,
    thread_id='thread_s0b6JzLU2o2uXZsw3b21LVcn')],
  object='list',
  first_id='msg_nQqma6fUFnx9WhT5ilA11SxU',
  last_id='msg_2zeWwRIbBiZzMp7Q1YnnVgjN',
  has_more=False)

Below, you see the response from the Assistant, without access to the Retriever tool.

SyncCursorPage[ThreadMessage](data=[
  ThreadMessage(id='msg_ERivEkNDEb4s3UpDb6PRYQix',
    assistant_id='asst_ouXMdIOna0blYE7Zm7kRKtp1',
    content=[MessageContentText(text=Text(annotations=[],
      value="As of my last update in April 2023, I do not have access to real-time data, including current events or sports results. To find out the latest information on the winner of the 2023 Rugby World Cup, I would recommend checking the latest news on sports news websites, official tournament information, or using a search engine for the most recent updates. If you have access to these resources, they can provide you with the answer you're looking for."),
      type='text')],
    created_at=1699450734,
    file_ids=[],
    metadata={},
    object='thread.message',
    role='assistant',
    run_id='run_3NhubSHCarv5593E7IhlsvY9',
    thread_id='thread_logOF1PWWygNtZauY96LSeZR'),
  ThreadMessage(id='msg_HAW7HJIpTsPk762wDIlezYRb',
    assistant_id=None,
    content=[MessageContentText(text=Text(annotations=[],
      value='Who won the 2023 rugby world cup?'),
      type='text')]

⭐️ Follow me on LinkedIn for updates on Large Language Models ⭐️

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.