LangChain Agents With OpenAI Function Calling

The launch of OpenAI’s Function Calling feature is sure to ignite a multitude of creative implementations and applications. As an example, LangChain has adopted Function Calling in its Agents.

I’m currently the Chief Evangelist @ HumanFirst. I explore & write about all things at the intersection of AI and language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.

Introduction

Large Language Models (LLMs), like Conversational UIs in general, are optimised to receive highly unstructured data in the form of conversational natural language.

This unstructured data is then structured, processed and subsequently unstructured again in the form of natural language output. Converting structured data into unstructured natural language output is known as Natural Language Generation (NLG).

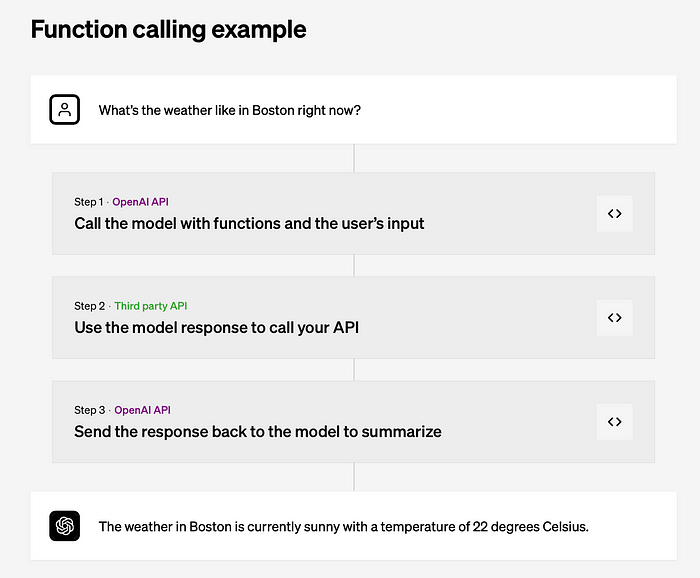

Function Calling


With OpenAI Function Calling, the focus has been on the JSON output, and on how LLM output in the form of a JSON document can be submitted directly to an API.
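To make the JSON idea concrete, here is a minimal sketch of what a function definition and a function-call response roughly look like. The function name `get_current_weather`, its parameters and the hand-written `assistant_message` are hypothetical and only for illustration; no API request is made.

```python
import json

# Hypothetical function definition, written in the JSON Schema style
# that Function Calling uses to describe a function to the model.
get_weather_spec = {
    "name": "get_current_weather",
    "description": "Get the current weather for a city",
    "parameters": {
        "type": "object",
        "properties": {
            "city": {"type": "string", "description": "City name"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["city"],
    },
}

# Hand-written stand-in for an assistant message that requests a function call.
# Note that "arguments" is a JSON string, not a dict.
assistant_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "get_current_weather",
        "arguments": '{"city": "Cape Town", "unit": "celsius"}',
    },
}


def extract_call(message):
    """Return (function name, parsed arguments), or None if no call was requested."""
    call = message.get("function_call")
    if call is None:
        return None
    return call["name"], json.loads(call["arguments"])


name, args = extract_call(assistant_message)
print(name, args)
```

The parsed `args` dict is what you would hand to your own API or function, ideally after validating it, since the model is not guaranteed to produce valid arguments.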

However, as LangChain has shown recently, Function Calling can be used under the hood for agents.

Functions simplify prompts, and there can also be a saving on tokens, seeing that the prompt no longer needs to describe to the LLM what tools it has at its disposal and how to format its output; the tools are passed as function definitions instead.

Under the hood LangChain converts the tool spec to a function tool spec.
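As a rough illustration of that conversion, the sketch below maps a simple tool (a name, a description and a function that takes one string) to a function definition. `SimpleTool`, `tool_to_function_spec` and the `query` parameter name are my own hypothetical stand-ins and not LangChain's actual code.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SimpleTool:
    """Hypothetical stand-in for an agent tool with a single string input."""
    name: str
    description: str
    func: Callable[[str], str]


def tool_to_function_spec(tool: SimpleTool) -> dict:
    """Build a function definition from the tool's name and description.

    The single string parameter called "query" is an assumption
    made for this sketch.
    """
    return {
        "name": tool.name,
        "description": tool.description,
        "parameters": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    }


calculator = SimpleTool(
    name="Calculator",
    description="useful for when you need to answer questions about math",
    func=lambda query: f"(result for {query})",  # stub, no real maths here
)

spec = tool_to_function_spec(calculator)
print(spec)
```

The point is that the tool's name and description travel to the model as structured data, rather than as instructions written into the prompt text.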

When a result is returned from the LLM and it contains the function_call argument, LangChain parses that into a tool invocation. If there is no function_call, the output is returned to the user.

LangChain has introduced a new type of message, “FunctionMessage”, to pass the result of calling the tool back to the LLM.

LangChain then continues until ‘function_call’ is no longer returned from the LLM, meaning it’s safe to return to the user!
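The loop described above can be sketched in a few lines of plain Python. This is a simplified illustration under my own assumptions, not LangChain's implementation: `fake_llm` is a hypothetical stand-in for the chat model, `TOOLS` is a hypothetical tool registry, and the message with role "function" plays the part of the FunctionMessage.

```python
import json
import math


def fake_llm(messages):
    """Hypothetical stand-in for the chat model.

    It requests the calculator once, then answers in natural language
    after it has seen the function result.
    """
    last = messages[-1]
    if last["role"] == "function":
        return {"role": "assistant", "content": f"The result is {last['content']}."}
    return {
        "role": "assistant",
        "content": None,
        "function_call": {
            "name": "Calculator",
            "arguments": json.dumps({"query": "1971"}),
        },
    }


# Hypothetical tool registry: tool name -> callable taking keyword arguments.
TOOLS = {"Calculator": lambda query: str(math.sqrt(float(query)))}


def run_agent(question, llm=fake_llm, max_steps=5):
    """Call the model until it stops returning a function_call."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = llm(messages)
        messages.append(reply)
        call = reply.get("function_call")
        if call is None:
            # No function_call: the reply is the final answer for the user.
            return reply["content"]
        # Parse the JSON arguments and run the requested tool.
        args = json.loads(call["arguments"])
        result = TOOLS[call["name"]](**args)
        # Pass the tool result back to the model as a function message.
        messages.append({"role": "function", "name": call["name"], "content": result})
    raise RuntimeError("agent did not finish within max_steps")


answer = run_agent("What is the square root of 1971?")
print(answer)
```

The `max_steps` guard is a design choice of this sketch, so that a model which keeps requesting tools cannot loop forever.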

Below is a working code example; notice AgentType.OPENAI_FUNCTIONS.

```shell
pip install openai
pip install google-search-results
pip install langchain
```

```python
import os

from langchain import LLMMathChain, SerpAPIWrapper
from langchain.agents import AgentType, Tool, initialize_agent
from langchain.chat_models import ChatOpenAI

# Replace the placeholders with your own API keys.
os.environ["OPENAI_API_KEY"] = "xxxxxxxxxxxxxxxxxxxxxxxx"
os.environ["SERPAPI_API_KEY"] = "xxxxxxxxxxxxxxxxxxxxxxxx"

llm = ChatOpenAI(temperature=0, model="gpt-3.5-turbo-0613")
search = SerpAPIWrapper()
llm_math_chain = LLMMathChain.from_llm(llm=llm, verbose=True)

# Each tool gets a name, a callable and a description the LLM uses to choose it.
tools = [
    Tool(
        name="Search",
        func=search.run,
        description="useful for when you need to answer questions about current events. You should ask targeted questions",
    ),
    Tool(
        name="Calculator",
        func=llm_math_chain.run,
        description="useful for when you need to answer questions about math",
    ),
]

# AgentType.OPENAI_FUNCTIONS selects the Function Calling based agent.
mrkl = initialize_agent(tools, llm, agent=AgentType.OPENAI_FUNCTIONS, verbose=True)
```

The agent is run with the question:

```python
mrkl.run("What is the square root of the year of birth of the founder of Space X?")
```

And the output from the agent:

````text
> Entering new chain...

Invoking: `Calculator` with `sqrt(1971)`

> Entering new chain...
sqrt(1971)
```text
sqrt(1971)
```
...numexpr.evaluate("sqrt(1971)")...

Answer: 44.395945760846224
> Finished chain.
Answer: 44.395945760846224

The square root of the year of birth of the founder of SpaceX is approximately 44.4.

> Finished chain.

The square root of the year of birth of the founder of SpaceX is approximately 44.4.
````

To see what is going on under the hood, enable debug mode before running the agent:

```python
import langchain

langchain.debug = True
```

With debug enabled, LangChain prints the detailed input and output of each step.
