How Is GPT-3 Reimagining Traditional Chatbot Architecture?

And Why This Might Not Always Be As Good As It Seems…

Introduction

Traditionally, chatbot architecture can be divided into two parts:

- Natural Language Understanding (NLU) and

- Dialog Management.

NLU is used to extract intents (verbs) and entities (nouns) from user input.

The dialog portion manages the chatbot responses and the dialog state.

One could say this gives the four pillars of chatbot architecture: intents, entities, bot responses and dialog state.

There has been a movement to deprecate intents and the dialog state machine, and even to replace the scripted dialog with Natural Language Generation (NLG).

GPT-3 has deprecated all four of these pillars:

- Defining Rigid Intents

- Defining Entities

- Bot Responses (Dialog Wording) &

- State Management.

Building a prototype is fast and the result is very impressive. The problem lies with:

- Finetuning

- Scaling

- Customizing

- Integration.

Intent & Entity Deprecation

For conversational agents, also referred to as chatbots, the goal is to mimic, and ideally exceed, what is possible in human-to-human conversation.

However, an important part of any human-to-human conversation is intent discovery.

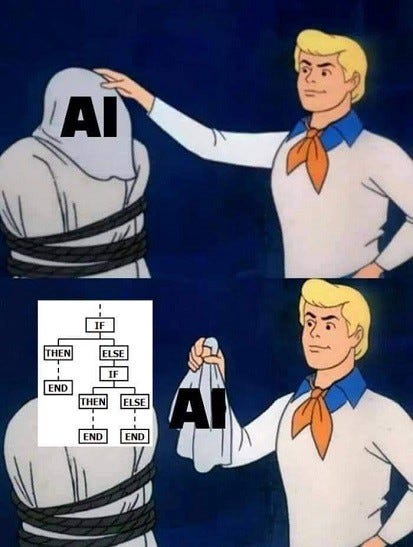

The intent layer sits between the NLU ML model and the dialog management component. It is strait-laced and intransigent: a conditional, if-this-then-that mechanism that steers the conversation.

Think of it like this: when you approach someone attending to an information desk, their prime objective is to determine the intent of your request.

Once intent is established, the conversation can lead to resolution.

And herein lies the challenge: in most chatbot platforms, a machine learning model of some sort assigns each user utterance to a specific intent.

That intent is then tied to a specific point in the state machine (aka dialog tree). As you can see from the sequence above, the user input “I am thinking of buying a dog.” is matched to the intent Buy Dog. In other words, intents are hardcoded to dialog entry points.
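The coupling between intents and dialog entry points can be sketched in a few lines. This is only an illustration, not how any particular platform implements it: the keyword matcher stands in for a trained ML classifier, and the names `INTENT_KEYWORDS`, `DIALOG_ENTRY_POINTS`, `classify_intent` and `route` are hypothetical.

```python
# Hypothetical sketch: a toy keyword matcher stands in for the ML intent model.
INTENT_KEYWORDS = {
    "buy_dog": {"buy", "buying", "dog"},
    "find_vet": {"vet", "sick"},
}

# Each intent is hardcoded to exactly one entry point in the dialog tree.
DIALOG_ENTRY_POINTS = {
    "buy_dog": "ask_preferred_breed",
    "find_vet": "ask_location",
}


def classify_intent(utterance: str) -> str:
    """Return the intent whose keywords overlap most with the utterance."""
    words = set(utterance.lower().replace(".", " ").replace(",", " ").split())
    scores = {
        intent: len(words & keywords)
        for intent, keywords in INTENT_KEYWORDS.items()
    }
    best_intent = max(scores, key=scores.get)
    # No keyword matched at all: signal that no intent was found.
    return best_intent if scores[best_intent] > 0 else "fallback"


def route(utterance: str) -> str:
    """Map an utterance to a dialog entry point via its intent."""
    intent = classify_intent(utterance)
    return DIALOG_ENTRY_POINTS.get(intent, "ask_to_rephrase")


print(route("I am thinking of buying a dog."))
```

Anything the intent table does not anticipate ends up at the fallback entry point, which is the rigidity the section describes.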

Deprecation Of State Machines

Machine learning dialogs, and the implicit deprecation of the state machine, are here to stay. Many doubted this approach as too vague, and doing away with states seemed a bridge too far.

If you trust a model for intent and entity detection, why not trust it for the dialog?

While other organizations still stick to the three approaches shown in the image above, Rasa is one company leading the way with its approach. The fact that its ML stories are maturing well, and scaling with new features, shows that the approach is ahead of its time.

Currently chatbots are seen as conversational AI and machine learning implementations.

The truth of the matter is that ML and AI only play a role in the NLU/NLP portion, and even there only to some extent.

The management of conversation state, dialog turns and bot wording is very far removed from ML/AI. It is in actual fact a state machine, stepping from one state to another based on fixed conditions.
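A minimal sketch of such a state machine is shown here. The states, wording and keyword conditions are all hypothetical and only meant to illustrate that every response and every transition is authored by hand rather than learned.

```python
# Hypothetical sketch of a hand-authored dialog state machine.
# Every state has a fixed bot response and fixed transition conditions.
DIALOG_TREE = {
    "start": {
        "response": "Hi! Are you looking to buy or adopt a dog?",
        "transitions": {"buy": "ask_breed", "adopt": "ask_shelter"},
    },
    "ask_breed": {
        "response": "Which breed are you interested in?",
        "transitions": {},
    },
    "ask_shelter": {
        "response": "Which city should I search for shelters in?",
        "transitions": {},
    },
}


def next_state(current: str, user_input: str) -> str:
    """Step to the next state if a fixed condition matches, else stay put."""
    for keyword, target in DIALOG_TREE[current]["transitions"].items():
        if keyword in user_input.lower():
            return target
    return current


state = "start"
print(DIALOG_TREE[state]["response"])
state = next_state(state, "I would like to adopt one")
print(DIALOG_TREE[state]["response"])
```

Nothing in this loop is learned from data; adding a new conversational path means editing the tree by hand.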

Deprecation Of Dialog Scripts

I wrote about the principle of script deprecation in October 2019. But again, the idea is one thing; implementation is something different.

The general term is NLG (Natural Language Generation). NLG can be seen as the inverse of NLU.

Where NLU structures unstructured data, NLG un-structures structured data into a conversational format. The key phrase for me was controllable NLG.

The focus seems to be on adapting responses to changing context…
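The inverse relationship can be illustrated with a toy round trip. This is only a sketch under stated assumptions: the regular expression stands in for an NLU model, the template stands in for NLG (it is the scripted kind of wording that a generative model would replace), and the booking scenario and the function names `understand` and `generate` are hypothetical.

```python
import re


def understand(utterance: str) -> dict:
    """Toy NLU: turn free text into a structured frame."""
    match = re.search(
        r"book a table for (\d+) at (\d{1,2}(?:am|pm))", utterance.lower()
    )
    if not match:
        return {"intent": "unknown", "entities": {}}
    return {
        "intent": "book_table",
        "entities": {"party_size": int(match.group(1)), "time": match.group(2)},
    }


def generate(frame: dict) -> str:
    """Toy NLG: turn a structured frame back into conversational text."""
    if frame["intent"] != "book_table":
        return "Sorry, I did not catch that."
    entities = frame["entities"]
    return (
        f"Sure, I have booked a table for {entities['party_size']} "
        f"at {entities['time']}."
    )


frame = understand("Please book a table for 4 at 7pm")
print(frame)
print(generate(frame))
```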

Again the focus is on the conversational agent’s agility and scaling well.

GPT-3 excels here with natural-sounding and flawless NLG. The challenge lies in supporting different languages and customizing the responses.

Finetuning

Herein lies the problem…

Since OpenAI made text processing available with GPT-3, the question has been asked: is this the ultimate and only interface you need to create a Conversational AI interface, or chatbot? Why still bother with other frameworks and environments?

Yes, there are instances where GPT-3 can be used in a standalone mode. For instance:

- a mood-to-color chatbot,

- a fun sarcastic chatbot,

- a friend chit-chat bot etc.

Currently, there is minimal capacity for supporting fine-tuning projects on GPT-3.

OpenAI is working on building a self-serve fine-tuning endpoint that will make this accessible to all users, but concrete timelines are not available.

For now, in most production and enterprise implementations, GPT-3 will play a support role…

There are definitely good implementation opportunities for the Conversational AI aspect of GPT-3.

There is a very real use case for GPT-3 as a support API, where text is processed and/or generated to assist existing NLU functionality.

For example, GPT-3 can pre-process user input before it is handed to the NLU engine.
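One way such pre-processing could look is sketched here, under clear assumptions: the few-shot examples, the prompt wording and the function names are hypothetical, and `fake_complete` is a stand-in so the sketch runs without an API key. In practice the `complete` argument could wrap a completion call like the one shown later in this article.

```python
from typing import Callable

# Hypothetical few-shot examples: noisy user input -> cleaned-up sentence.
FEW_SHOT_EXAMPLES = [
    ("hi um i wanna like get a dog maybe", "I want to buy a dog."),
    ("my doggo is sick where do i go??", "I need to find a vet for my dog."),
]


def build_prompt(user_input: str) -> str:
    """Assemble a few-shot prompt asking the model to normalize the input."""
    lines = ["Rewrite each message as one clear sentence.", ""]
    for raw, clean in FEW_SHOT_EXAMPLES:
        lines += [f"Message: {raw}", f"Rewritten: {clean}", ""]
    lines += [f"Message: {user_input}", "Rewritten:"]
    return "\n".join(lines)


def preprocess(user_input: str, complete: Callable[[str], str]) -> str:
    """Return the model's cleaned-up text, ready to hand to the NLU engine."""
    return complete(build_prompt(user_input)).strip()


def fake_complete(prompt: str) -> str:
    """Stand-in for a real completion call so the sketch runs offline."""
    return " I want to adopt a puppy.\n"


print(preprocess("sooo thinking bout adopting a puppy lol", fake_complete))
```

Passing the completion function in as an argument keeps the existing NLU engine in charge and makes the language model an optional, swappable helper.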

The challenge is that GPT-3 seems very well positioned to write reviews, compile questions and hold a general conversation. This could lead to a proliferation of bots writing reviews, online ads and general copy.

This automation does not need to be malicious in principle. OpenAI is seemingly making every effort to ensure the responsible use of its APIs.

The fact that extensive training is not required, and that a few key words or phrases can point the API in the right direction, is astounding.

There are, however, open-source alternatives for most of the functionality available.

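    import openai  # legacy (pre-1.0) openai package, which exposes Completion.create

    openai.api_key = "xxxxxxxxxxxxxxxxxx"  # placeholder; substitute your own key

    # The prompt primes the model with a persona and the conversation so far,
    # ending on "AI:" so that the completion is the assistant's next turn.
    response = openai.Completion.create(
        engine="davinci",
        prompt="The following is a conversation with an AI assistant. The assistant is helpful, creative, clever, and very friendly.\n\nHuman: Hello, who are you?\nAI: I am an AI created by OpenAI. How can I help you today?\nHuman: What is RAM?\nAI:",
        temperature=0.9,
        max_tokens=150,
        top_p=1,
        frequency_penalty=0.0,
        presence_penalty=0.6,
        # Stop at the end of the line or when the model starts a new speaker turn.
        stop=["\n", " Human:", " AI:"],
    )

    print(response)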

As seen above, creating a chatbot with around 14 lines of code is impressive at first, but the fine-tuning, scaling, customization and integration concerns raised earlier demand serious consideration.

Conclusion

A low-code approach is always attractive for collaboration, rapid development, quick upskilling and so on.

When it comes to scaling, integration, customization and enterprise demands, such an approach simply does not suffice.