Alexa Conversations Is A New AI-Driven Approach To Conversational Interfaces

But Does It Make The Conversational Experience More Natural?

Introduction

Looking at the chatbot development tools and environments currently available, I see three ailments that require a remedy:

- Compound Contextual Entities

- Entity Decomposition

- Deprecation of Rigid State Machine Dialog Management

The aim of Alexa Conversations is to take voice interactions from one-shot interactions to multi-turn interactions. More complex tasks like booking a flight, ordering food or banking demand multi-turn conversations.

One could say conversational commerce demands an environment in which multi-turn conversations can be developed quickly and efficiently. Amazon must have recognized this, and Alexa Conversations is their foray into addressing this need.

Compound Contextual Entities

Huge strides have been made in this area, and many chatbot ecosystems accommodate compound contextual entities.

Contextual Entities

The process of annotating user utterances is a way of identifying entities by their context within a sentence.

Often an entity has a finite, defined set of values. Other entities cannot be represented by a finite list, such as cities of the world, names or addresses. These entity types have too many variations to be listed individually.

For these entities you must use annotations: the entities are defined and detected by their context within the user utterance.
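To make this concrete, here is a minimal Python sketch of what contextually annotated training data can look like. The span format (character offsets tagged with an entity name), the `annotate` and `extract` helpers, and the entity name `to_city` are illustrative assumptions, not the schema of any particular platform. The sketch only shows the data; a trained model would learn from such examples to tag cities in unseen utterances without ever consulting a list of cities.

```python
from dataclasses import dataclass


@dataclass
class Annotation:
    """A labelled span inside an utterance (hypothetical format)."""
    entity: str
    start: int  # inclusive character offset
    end: int    # exclusive character offset


def annotate(utterance: str, entity: str, value: str) -> Annotation:
    """Build an annotation by locating the value's span in the utterance."""
    start = utterance.index(value)  # raises ValueError if the value is absent
    return Annotation(entity, start, start + len(value))


def extract(utterance: str, annotations: list[Annotation]) -> dict[str, str]:
    """Recover entity values from their annotated spans."""
    return {a.entity: utterance[a.start:a.end] for a in annotations}


# The same entity is marked in different sentence contexts; no finite list of cities is involved.
examples = [
    ("book a flight to Windhoek", [annotate("book a flight to Windhoek", "to_city", "Windhoek")]),
    ("I need to get to Cape Town", [annotate("I need to get to Cape Town", "to_city", "Cape Town")]),
]

for text, spans in examples:
    print(extract(text, spans))
```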

Compound Entities

The basic premise is that users will often express multiple entities within a single utterance; these are referred to as compound entities.

In the example below, there are four entities defined:

- travel_mode

- from_city

- to_city

- date_time

These entities can be detected in the first pass, after which confirmation can be solicited from the user.
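The first-pass-then-confirm pattern can be sketched in a few lines of Python. The entity names follow the list above, but the detected values are made up and stand in for the output of an NLU model; the `confirmation_prompt` helper and its wording are hypothetical, not part of any framework.

```python
# Entity names from the example above; everything else is illustrative.
REQUIRED = ["travel_mode", "from_city", "to_city", "date_time"]


def confirmation_prompt(detected: dict[str, str]) -> str:
    """Confirm all entities at once, or list the ones still missing."""
    missing = [name for name in REQUIRED if name not in detected]
    if missing:
        return "I still need: " + ", ".join(missing) + "."
    return (
        f"So you want to travel by {detected['travel_mode']} "
        f"from {detected['from_city']} to {detected['to_city']} "
        f"on {detected['date_time']}. Is that right?"
    )


# One utterance such as "train from Cape Town to Pretoria on Friday morning"
# can yield all four entities in a single pass.
first_pass = {
    "travel_mode": "train",
    "from_city": "Cape Town",
    "to_city": "Pretoria",
    "date_time": "Friday morning",
}
print(confirmation_prompt(first_pass))
print(confirmation_prompt({"travel_mode": "bus"}))
```

When the first pass captures every entity, a single confirmation turn replaces four separate questions.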

Entity Decomposition

The Microsoft LUIS Approach

Entity decomposition is important both for intent prediction and for extracting data from the entity. The best way to explain this is by way of an example.

We start by defining a single entity, called Travel Detail.

Within this entity, we define three sub-entities; you can think of these as nested entities or sub-types. The three sub-types are:

- Time Frame

- Mode

- City

City, in turn, has two sub-sub-types:

- From City

- To City
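The hierarchy above can be mirrored as nested Python dataclasses. This is only an analogy for how a decomposed entity groups its parts, not the LUIS schema or API, and the values are illustrative. The whole `TravelDetail` structure corresponds to the entity that informs intent prediction, while individual fields such as `city.to_city` are what you pull out for data extraction.

```python
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class City:
    """Sub-type City, decomposed into its two sub-sub-types."""
    from_city: Optional[str] = None
    to_city: Optional[str] = None


@dataclass
class TravelDetail:
    """The top-level entity; each field is one sub-type."""
    time_frame: Optional[str] = None
    mode: Optional[str] = None
    city: City = field(default_factory=City)


# Illustrative values for an utterance such as
# "take the bus from Johannesburg to Durban tomorrow".
trip = TravelDetail(
    time_frame="tomorrow",
    mode="bus",
    city=City(from_city="Johannesburg", to_city="Durban"),
)

print(trip.city.to_city)  # Durban: drill into one sub-sub-type
print(asdict(trip))       # the whole entity as one nested structure
```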

In my view, Microsoft LUIS is the leader in entity decomposition and has a complete solution in this regard; you can read more about it here.

Amazon Alexa Conversations

Alexa Conversations has a similar option, though it is not as complete and comprehensive as LUIS. Within Alexa Conversations you can define entities, which Amazon refers to as slots.

The aim during a conversation is to fill these slots (entities). Within Alexa Conversations you can create a slot with multiple properties attached to it. These properties can be seen as sub-slots or sub-categories, which together constitute the higher-order entity.

Alexa Conversations introduces a new slot type, custom with properties (PCS), which constitutes a hierarchical collection of slots. A PCS can be used to pass structured data between build-time components such as API Definitions and Response Templates.

Deprecation Of Rigid State Machine Dialog Management

Deprecating the state machine for dialog management demands a more abstract approach, and many developers are not comfortable relinquishing control to an AI model.

The aim of Alexa Conversations (AC) is to furnish developers with the tools to build a more natural-feeling Alexa skill with fewer lines of code. AC is an AI-driven approach to dialog management that enables the creation of skills users can interact with in a natural, unconstrained manner. This AI-driven approach is more abstract, but the development process is more conversation-driven. Sample dialogs are important, together with annotation of data.

You provide Alexa with a set of dialogs that demonstrate the functionality required for the skill.

The build-time systems behind Alexa Conversations take these dialogs and create thousands of variations of the examples. This build process takes quite a while to complete.

Fortunately, any errors are surfaced at the start of the process, which is convenient.
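The sketch below is purely a toy and is not how Alexa Conversations generates its variations. It only shows why such a build can take a while: recombining even a handful of hypothetical phrasings, openers and slot values multiplies quickly.

```python
from itertools import product

# Hypothetical ingredients: a few phrasings, optional openers and slot values.
phrasings = [
    "I want to fly to {city}",
    "book me a flight to {city}",
    "get me to {city} by plane",
]
cities = ["Paris", "Nairobi", "Lima", "Osaka"]
openers = ["", "hi alexa, ", "please "]

# Every combination of opener, phrasing and city becomes one variation.
variations = [
    opener + phrasing.format(city=city)
    for opener, phrasing, city in product(openers, phrasings, cities)
]

print(len(variations))  # 36, i.e. 3 openers * 3 phrasings * 4 cities
print(variations[0])    # I want to fly to Paris
```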

AC builds a statistical model that interprets customer inputs and predicts the best response.

From that information, AC is able to make accurate assumptions.

AC uses AI to bridge the gap between the voice applications you can build manually and the vast range of possible conversations.

Framework Components

The five build-time components are:

- Dialogs

- Slots

- Utterance Sets

- Response Templates

- API Definitions

Dialogs

Dialogs are example conversations, defined by you, between the user and Alexa. A dialog can be multi-turn, and its complexity is really up to you to define.

For my prototype there were three entities (slots) to capture, and four example dialogs of four utterances each were sufficient. Again, AC uses these dialogs to create an AI model that produces a natural and adaptive dialog.
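To illustrate what one such example dialog contains, here is a hypothetical Python representation: four turns, with the user turns annotated with the slot values they supply. This is not the format Alexa Conversations actually uses, and the slot names and values are made up; it only shows how three slots can be spread across a multi-turn dialog.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    speaker: str  # "user" or "alexa"
    text: str
    slots: dict[str, str] = field(default_factory=dict)  # slot values annotated on this turn


# One hypothetical example dialog: the user supplies three slots over two turns.
dialog = [
    Turn("user", "I'd like to book a train to Durban", {"mode": "train", "to_city": "Durban"}),
    Turn("alexa", "When would you like to travel?"),
    Turn("user", "On Monday", {"date": "Monday"}),
    Turn("alexa", "Booking a train to Durban for Monday."),
]


def collected_slots(turns: list[Turn]) -> dict[str, str]:
    """Merge the slot annotations across all user turns of a dialog."""
    merged: dict[str, str] = {}
    for turn in turns:
        if turn.speaker == "user":
            merged.update(turn.slots)
    return merged


print(collected_slots(dialog))
```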

Slots

Slots are the entities you would like to fill during the conversation. If the user provides all three required slots in the first utterance, the conversation will have only one dialog turn.
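The relationship between the first utterance and the length of the conversation can be sketched with a simplifying assumption: each follow-up turn fills exactly one missing slot. Real users may fill several slots, or none, in a follow-up, so treat this as a rough lower-bound intuition rather than a rule; the helper and slot names are hypothetical.

```python
def turns_needed(required: set[str], provided_first: set[str]) -> int:
    """Count user turns, assuming each follow-up turn fills exactly one missing slot."""
    missing = required - provided_first
    return 1 + len(missing)


REQUIRED_SLOTS = {"mode", "to_city", "date"}
print(turns_needed(REQUIRED_SLOTS, {"mode", "to_city", "date"}))  # 1
print(turns_needed(REQUIRED_SLOTS, {"to_city"}))                  # 3
```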

The conversation can be longer, of course, should it take more turns to solicit the relevant information from the user to fill the slots. The interesting part is that there are two types of slots (entities): custom slots with values, and custom slots with properties.

Alexa Conversations introduces custom slot types with properties (PCS) to define the data passed between components. They can be singular or compound. As stated previously, compound entities or slots can be decomposed.

I expect compound entities that can be decomposed to be implemented more widely, and you will start seeing them in more frameworks.
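The difference between the two slot types can be sketched in Python. A custom slot with values is modelled here as a finite list of canonical values with synonyms; a custom slot with properties is modelled as a named group of properties that together form one higher-order slot. The type names, values and synonyms are hypothetical, and this is an analogy, not the Alexa Conversations definition format.

```python
from dataclasses import dataclass
from typing import Optional

# Custom slot type with values: a finite list of canonical values, each with
# a few synonyms (values and synonyms are illustrative).
TRAVEL_MODE_VALUES = {
    "train": {"train", "rail"},
    "bus": {"bus", "coach"},
    "plane": {"plane", "flight", "air"},
}


def resolve_travel_mode(spoken: str) -> Optional[str]:
    """Map a spoken word to its canonical value, or None if it is not in the list."""
    word = spoken.lower()
    for canonical, synonyms in TRAVEL_MODE_VALUES.items():
        if word in synonyms:
            return canonical
    return None


# Custom slot type with properties: a named group of properties that together
# form one higher-order slot (type and property names are hypothetical).
@dataclass
class TripRequest:
    mode: str  # typed by the value-list slot above
    to_city: str
    date: str


raw = {"mode": "coach", "to_city": "Durban", "date": "Monday"}
mode = resolve_travel_mode(raw["mode"])
if mode is None:
    raise ValueError(f"unknown travel mode: {raw['mode']}")
trip = TripRequest(mode=mode, to_city=raw["to_city"], date=raw["date"])
print(trip)  # TripRequest(mode='bus', to_city='Durban', date='Monday')
```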

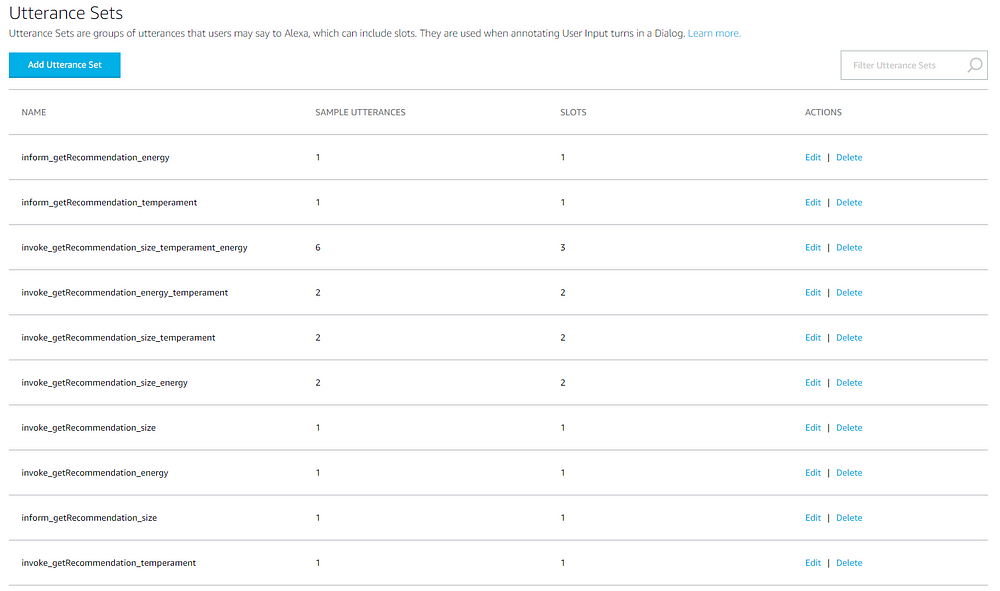

Utterance Sets

Utterance Sets are groups of utterances that users may say to Alexa, which can include slots. They are used when annotating User Input turns in a Dialog.

The one big drawback I see in AC is that examples need to be defined for each combination of slots/entities.

For example:

1. abc

2. a

3. b

4. c

5. ab

6. bc

7. ac

For the three slots/entities, seven example sets need to be given. Imagine how this expands should you have more slots/entities.
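The growth is easy to quantify. Assuming, as described above, that every non-empty subset of slots needs its own example set, n slots require 2^n - 1 sets. The short Python sketch below enumerates the subsets with `itertools.combinations` (single letters stand for slots) and prints how the count grows.

```python
from itertools import combinations


def slot_combinations(slots: list[str]) -> list[str]:
    """List every non-empty subset of slots, smallest subsets first."""
    return [
        "".join(combo)
        for size in range(1, len(slots) + 1)
        for combo in combinations(slots, size)
    ]


three = slot_combinations(["a", "b", "c"])
print(three)       # ['a', 'b', 'c', 'ab', 'ac', 'bc', 'abc']
print(len(three))  # 7

# The count is 2**n - 1 for n slots, so it roughly doubles with each new slot.
for n in range(1, 7):
    print(n, 2**n - 1)
```

Six slots already mean 63 example sets, which is why this becomes cumbersome quickly.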

Response Templates

Responses are how Alexa responds to users in the form of audio and visual elements. They are used when annotating Alexa Response turns in a Dialog.
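As a rough illustration of the audio side of a response template, here is a Python sketch that fills one of several phrasings with slot values. Choosing a phrasing at random is one common way to keep responses from sounding repetitive; the template name, phrasings and slot names are hypothetical, and visual elements are not modelled.

```python
import random
from string import Template

# A hypothetical response template with two phrasings.
CONFIRM_BOOKING = [
    Template("Booked a $mode to $to_city for $date."),
    Template("All set: your $mode to $to_city on $date is booked."),
]


def render(templates: list[Template], slots: dict[str, str], rng: random.Random) -> str:
    """Choose one phrasing at random and fill it with slot values."""
    return rng.choice(templates).substitute(slots)


rng = random.Random(0)  # seeded so repeated runs give the same choice
print(render(CONFIRM_BOOKING, {"mode": "train", "to_city": "Durban", "date": "Monday"}, rng))
```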

API Definitions

API Definitions define interfaces with your back-end service, using arguments as inputs and a return value as output.
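The idea of an API definition, a named interface with declared input arguments and a structured return, can be sketched in Python. `ApiDefinition`, `BookTrip`, `BookingResult` and the fake back end are all hypothetical; the sketch only shows the contract of checking the declared arguments, calling the back end and returning structured data that a response could then use.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class BookingResult:
    """Hypothetical structured return value (comparable to a slot type with properties)."""
    confirmation_code: str
    price: float


@dataclass
class ApiDefinition:
    name: str
    arguments: list[str]  # names of the declared input arguments
    handler: Callable[..., BookingResult]

    def invoke(self, **kwargs: str) -> BookingResult:
        """Check that exactly the declared arguments are supplied, then call the back end."""
        if set(kwargs) != set(self.arguments):
            raise ValueError(f"{self.name} expects {sorted(self.arguments)}, got {sorted(kwargs)}")
        return self.handler(**kwargs)


def fake_booking_backend(mode: str, to_city: str, date: str) -> BookingResult:
    """Stand-in for a real back-end call; returns fixed illustrative data."""
    return BookingResult(confirmation_code=f"{mode[:1].upper()}-{to_city[:3].upper()}", price=42.0)


book_trip = ApiDefinition("BookTrip", ["mode", "to_city", "date"], fake_booking_backend)
result = book_trip.invoke(mode="train", to_city="Durban", date="Monday")
print(result)  # BookingResult(confirmation_code='T-DUR', price=42.0)
```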

Conclusion

AC is definitely a move in the right direction…

The Good

- The advent of compound slots/entities that can be decomposed, adding data structures to entities.

- Deprecating the state machine and creating an AI model to manage the conversation.

- Making voice assistants more conversational.

- Contextually annotated entities/slots.

- Error messages during the building of the model were descriptive and helpful.

The Not So Good

- It might sound negligible, but building the model takes a while. I found that the errors in my model were surfaced at the beginning of the build process, and training stopped. Should your model have no errors, the build is long.

- Defining utterance sets is cumbersome. Creating utterance sets for all possible combinations of a large number of slots/entities is not ideal.

- It is complex, especially compared to an environment like Rasa. The art is to improve the conversational experience by introducing complex AI models while simultaneously simplifying the development environment.